#reduce api call time


Boom badabim bada pow

Welcome to septuplet au! Also now called injuries matter au

Where I make the elements into siblings, and despite this already being done by a bunch of people, let me make this my way or-

Maybe a similar way ig

What you see above is Cahaya and daun

There ain't no way Cahaya will be just fine after being hit on the head and the abdomen or gut by a giant hammer and flying through metal floors? Ceilings? (I know this happened to boi but dont drag him into this. this is also a septuplet au he aint included but dont u dare get ideas👹👹👹)

And he got thrown to space twice, very high or low temperature causes dead cells and stuff (welcome decayed skin)

So- yeah, he got into a longer coma so to speak, perhaps the actual logic into this is death but lets not get into that and just do 2-3 months folks

According to Google, the symptoms of frontal lobe damage are:

Weakness on one side of the body or one side of the face, Falling, Inability to solve problems or organize tasks, Reduced creativity, Impaired judgment, Reduced sense of taste or smell, Depression, Difficulty controlling emotions, Changes in behavior, Low motivation, Poor attention span, being easily distracted, Reduced or increased sexual interest, Odd sexual habits, Impulsive or risky behavior, Trouble with communication

And vocal cords get damaged with cold temperature so-

Kk, hes not completely incapable as he heals overtime(note: not completely), but trauma with the dark :> oh how will that help if he doesnt sleep well :)

(he uses sign language if he needs to stop speaking)

ALRIGHT moving on to air

Dude got his arm melted off by roktaroka i think thats his name, which very much hurt because lava, is still slow, which meant it was slow and painful, and with it being put on ice immediately (yes this situation summoned ais) it left quite a huge mark

And he also cant keep the ice hand for so long so bros ambidextrous just uses left the most now, might have trauma from long distance attacks and perhaps hot temperatures, so you could say api is trying his best for air and that goes the same for air to api

LETS GO DAUN

Yes he gains a type of inferiority complex so he has to be included and does his best, but not only that, he gains lightning scars from kirana, on his hands, so he has trouble controlling those hands

They will randomly end up shaking sometimes, and randomly drop, as in become paralyzed. This resulted in a lot of things getting broken, and this is where daun feels bad for gaining this problem, and at times it might hurt like theres still lightning striking him

OF COURSE petir feels bad and blames himself for getting caught like that haha-

Bro also got caught or kidnapped three times he blames himself for repeating that situation a lot.

ANGIN well, i know the fandom makes him love yaya's cookies, but imma be honest here, those things basically drugged him into drugging everyone, he for sure gained fear of those cookies. That made him out of it

Buuuuut what if as well he has a temptation to eat it at times, despite his whole mind not wanting to, so when he does end up eating it he becomes nauseous and vomits :)

API doesnt like seeing fear in peoples faces but that never washes away bc of his anger issues that causes people to be afraid so lets go low self esteem-

Tanah wants his brothers to be more better so thats why he shoves all responsibility onto himself but that also causes trauma!!!

Hes so afraid of losing them that he became very strict which causes to some arguments but he also tries his very best to look tough and be more capable, but there are times where its obvious like with movie 2, api and air try their best to help him despite him pushing them away and being in denial. Gopal was the one who did end up calming everyone down

Angin is the one who sees him always and is always the one who comforts him

Petir feels even more down knowing that hes the oldest and that he should be the one to take that burden of responsibility.

And yeah i might add more but who knows

#boboiboy#boboiboy galaxy#rambles#thoughts#bbb septuplet au#boboiboy petir#boboiboy angin#boboiboy tanah#boboiboy api#boboiboy air#boboiboy daun#boboiboy cahaya#yes trauma#bbb injuries matter au


How Enterprises Use Voice APIs for Call Routing and IVR Automation

Enterprises today handle thousands of customer calls every day. To manage these efficiently, many are turning to voice APIs. These tools help businesses automate call routing and interactive voice response (IVR) systems.

What Are Voice APIs?

Voice APIs are software interfaces that allow developers to build voice-calling features into apps or systems. These APIs can trigger actions like placing calls, receiving them, or converting speech to text. For enterprises, voice APIs make it easy to integrate intelligent call handling into their workflow.

Smarter Call Routing

Call routing directs incoming calls to the right agent or department. With voice APIs, this process becomes dynamic and rule-based.

For example, a customer calling from a VIP number can be routed directly to a premium support team. APIs allow routing rules based on caller ID, time of day, location, or even previous interactions. This reduces wait times and improves customer satisfaction.
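As a rough illustration of rule-based routing, the sketch below checks a list of rules in order and sends the call to the first matching queue. The `Call`, `Rule`, `Route` and `SampleRules` names, the queue names and the phone numbers are all made up for this example and do not come from any particular voice API.

```go
package main

import "fmt"

// Call holds the attributes a routing rule can inspect.
// Every name in this sketch is hypothetical, not a real voice API.
type Call struct {
	CallerID string
	Hour     int // local hour of day, 0-23
}

// Rule pairs a predicate with the queue that should receive matching calls.
type Rule struct {
	Name    string
	Matches func(Call) bool
	Queue   string
}

// Route returns the queue of the first matching rule, or the fallback queue.
func Route(c Call, rules []Rule, fallback string) string {
	for _, r := range rules {
		if r.Matches(c) {
			return r.Queue
		}
	}
	return fallback
}

// SampleRules puts VIP callers first, then sends after-hours calls to voicemail.
func SampleRules(vip map[string]bool) []Rule {
	return []Rule{
		{Name: "vip", Matches: func(c Call) bool { return vip[c.CallerID] }, Queue: "premium-support"},
		{Name: "after-hours", Matches: func(c Call) bool { return c.Hour < 9 || c.Hour >= 17 }, Queue: "voicemail"},
	}
}

func main() {
	rules := SampleRules(map[string]bool{"+15550100": true})
	fmt.Println(Route(Call{CallerID: "+15550100", Hour: 22}, rules, "general")) // premium-support
	fmt.Println(Route(Call{CallerID: "+15550199", Hour: 12}, rules, "general")) // general
}
```

Because the rules are plain data, changing the order or adding a location-based rule would not require touching `Route` itself.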

Automated IVR Systems

Interactive Voice Response (IVR) lets callers interact with a menu system using voice or keypad inputs. Traditional IVR systems are rigid and often frustrating.

Voice APIs enable smarter, more personalized IVR flows. Enterprises can design menus that adapt in real time. For instance, returning callers may hear different options based on their past issues. With speech recognition, users can speak naturally instead of pressing buttons.
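One way to picture an adaptive menu is a function that builds the prompt list per caller. This is only a sketch: the `Caller` type, its fields and the menu wording are assumptions made for illustration.

```go
package main

import "fmt"

// Caller is a hypothetical record of what is known about the person calling.
type Caller struct {
	Returning bool
	LastIssue string // e.g. "billing"; empty when unknown
}

// MenuFor returns the options to read out. Returning callers with a known
// previous issue get a shortcut to it as the first option.
func MenuFor(c Caller) []string {
	menu := []string{"billing", "technical support", "speak to an agent"}
	if c.Returning && c.LastIssue != "" {
		shortcut := "continue with your " + c.LastIssue + " request"
		return append([]string{shortcut}, menu...)
	}
	return menu
}

func main() {
	fmt.Println(MenuFor(Caller{}))
	fmt.Println(MenuFor(Caller{Returning: true, LastIssue: "billing"}))
}
```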

Scalability and Flexibility

One major benefit of using voice APIs is scalability. Enterprises don’t need physical infrastructure to manage call volume. The cloud-based nature of voice APIs means businesses can handle spikes in calls without losing quality.

Also, changes to call flows can be made quickly. New routing rules or IVR scripts can be deployed without touching hardware. This agility is crucial in fast-moving industries.

Enhanced Analytics and Integration

Voice APIs also provide detailed data. Enterprises can track call duration, drop rates, wait times, and common IVR paths. This data helps optimize performance and identify pain points.
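To make those metrics concrete, here is a small sketch that derives an average wait time and a drop rate from call records. The `CallRecord` shape is an assumption; a real provider would expose its own log format.

```go
package main

import "fmt"

// CallRecord is a hypothetical, simplified call log entry.
type CallRecord struct {
	WaitSeconds float64
	Dropped     bool
}

// Summarize returns the average wait in seconds and the fraction of dropped calls.
func Summarize(records []CallRecord) (avgWait, dropRate float64) {
	if len(records) == 0 {
		return 0, 0
	}
	var totalWait float64
	dropped := 0
	for _, r := range records {
		totalWait += r.WaitSeconds
		if r.Dropped {
			dropped++
		}
	}
	n := float64(len(records))
	return totalWait / n, float64(dropped) / n
}

func main() {
	avg, rate := Summarize([]CallRecord{
		{WaitSeconds: 10, Dropped: false},
		{WaitSeconds: 30, Dropped: true},
	})
	fmt.Printf("avg wait %.1fs, drop rate %.0f%%\n", avg, rate*100)
}
```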

Moreover, APIs easily integrate with CRMs, ticketing systems, and analytics tools. This ensures a seamless connection between calls and other business processes.

Final Thoughts

Voice APIs are transforming how enterprises manage voice communications. From intelligent call routing to adaptive IVR systems, the benefits are clear. Enterprises that adopt these tools gain speed, efficiency, and a better customer experience without much extra effort.


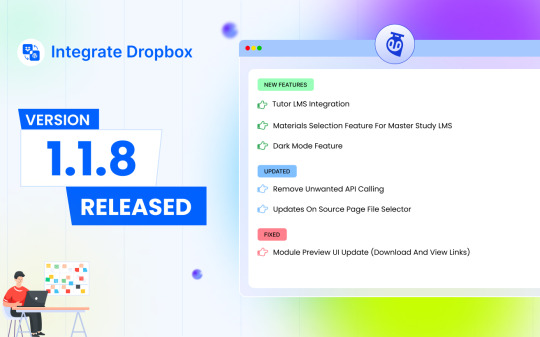

🎉 Exciting New Release: Integrate Dropbox v1.1.8 🎉

We are thrilled to announce the launch of Integrate Dropbox version 1.1.8—bringing powerful new features, critical updates, and key fixes to make your file management experience smoother and more efficient! 🚀

🔥 What’s New?

Tutor LMS Integration: Supercharge your eLearning platform! Now you can seamlessly link Dropbox to Tutor LMS, providing educators and learners with streamlined access to course materials and file storage.

Materials Selection for MasterStudy LMS: Tailor and manage course content effortlessly by integrating Dropbox directly with MasterStudy LMS—giving you full control over what materials are shared in your courses.

Dark Mode: We heard you! Introducing the much-anticipated dark mode, offering a sleek, eye-friendly interface for those late-night working sessions. 🌙

🛠️ What’s Improved?

Removed Unwanted API Calls: We’ve cleaned up unnecessary API calls, ensuring faster performance and reduced load times, giving you a more efficient user experience. ⚡

Enhanced Source Page File Selector: Searching for files has never been easier—an updated and more intuitive file selector on the source page improves navigation and file management.

🛑 Fixed:

Module Preview UI Update: We’ve refined the UI for module preview, fixing download and view links to ensure a smooth, hassle-free user experience. 📂

Upgrade to v1.1.8 today to enjoy these awesome new features and updates, whether you're using Dropbox for learning management, file storage, or collaboration. Let's make your workflow smarter and more efficient! 💼💡

#wordpress#dropbox#IntegrateDropbox#LMSIntegration#TutorLMS#MasterStudyLMS#DarkMode#FileManagement#EdTech#TechRelease#eLearning


This day in history

NEXT WEEKEND (June 7–9), I'm in AMHERST, NEW YORK to keynote the 25th Annual Media Ecology Association Convention and accept the Neil Postman Award for Career Achievement in Public Intellectual Activity.

#20yrsago Adrian Mole: the text-adventure game https://web.archive.org/web/20040603000808/www.c64unlimited.net/games/s/Secret Diary of Adrian Mole/Secret Diary of Adrian Mole.htm

#15yrsago Profile of the lock-hacker who bumped the “unbumpable” Medeco lock https://web.archive.org/web/20090525195144/http://www.wired.com/techbiz/people/magazine/17-06/ff_keymaster

#15yrsago Devilish Devenish-Phibbs bench-plaques around London https://web.archive.org/web/20090529001109/https://www.timeout.com/london/big-smoke/blog/7810/london-s_benches_and_the_strange_case_of_croy_devenish-phibbs.html

#10yrsago Women who’d never seen their vulvas given a mirror and a modesty screen https://www.youtube.com/watch?v=eC4dVe8pIGY

#10yrsago Engineering our way out of mass surveillance https://web.archive.org/web/20140603175909/smarimccarthy.is/blog/2014/05/28/engineering-our-way-out-of-fascism/

#10yrsago Harvard Bluebook: more threats to those who would cite the law https://web.archive.org/web/20140601042919/http://tinyletter.com/5ua/letters/a-cite-to-behold-5-useful-articles-vol-1-issue-10

#5yrsago Public outcry has killed an attempt to turn clickthrough terms of service into legally binding obligations (for now) https://www.consumerfinancemonitor.com/2019/05/22/ali-annual-meeting-ends-with-uncertain-future-for-restatement-of-the-law-consumer-contracts/

#5yrsago Nobel-winning economist Joe Stiglitz calls neoliberalism “a failed ideology” and sketches out a “progressive capitalism” to replace it https://www.commondreams.org/views/2019/05/30/after-neoliberalism

#5yrsago Google’s API changes mean only paid enterprise users of Chrome will be able to access full adblock https://9to5google.com/2019/05/29/chrome-ad-blocking-enterprise-manifest-v3/

#5yrsago Chase credit cards quietly reintroduce the binding arbitration clauses they were forced to eliminate a decade ago https://www.fastcompany.com/90357331/chase-adds-forced-arbitration-clause-to-slate-credit-cards

#5yrsago Ted Cruz backs AOC’s call for a lifetime ban on lobbying by former Congressjerks https://www.nakedcapitalism.com/2019/05/aoc-calls-for-ban-on-revolving-door-as-study-shows-2-3-of-recently-departed-lawmakers-now-lobbyists.html

#5yrsago To reduce plastic packaging, ship products in solid form https://www.treehugger.com/incredibly-simple-solution-plastic-packaging-waste-4857081

#5yrsago For the first time since the 70s, New York State is set to enshrine sweeping tenants’ protections https://web.archive.org/web/20190531131740/https://www.thenation.com/article/universal-rent-regulation-new-york/

#1yrago To save the news, ban surveillance ads https://pluralistic.net/2023/05/31/context-ads/#class-formation


Do You Want Some Cookies?

Working on project-extrovert has been an interesting challenge. Since the scope of this project shrunk down a lot since the first idea, one of the main things I dropped is the use of a database, mostly to avoid any cost I would have with hosting one. So things like authentication need to be fully client-side and/or client-stored. However, this is an application that doesn't rely on JavaScript, so how can I store data in the client without it? Well, do you want some cookies?

Why Cookies

I never actually used cookies in one of my projects before, mostly because all of them used JavaScript (and a JS framework), so I could just store everything using the Web Storage API (mainly localStorage). But now everything is server-driven, and any JavaScript that I add to this project is there to enhance the experience and shouldn't be necessary to use the application. So the only way to store something in the client, using the server, is cookies.

TL;DR Of How Cookies Work

A cookie, in some sense or another, is just an HTTP header that is sent every time the browser/client makes a request to the server. The server sends a Set-Cookie header on the first response, containing the value and optional "rules" for the cookie(s), which the browser then stores locally. After the cookie(s) is stored in the browser, every subsequent request to the server carries a Cookie header, from which the server can read the values.
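To see that exchange without a real browser, here is a minimal sketch using Go's net/http and httptest packages. The cookie name `visitor`, its value and the `RoundTrip` helper are made up for the example. The first request gets a Set-Cookie header back, and the second request echoes it in a Cookie header the way a browser would.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
)

// handler sets a cookie on the first visit and reads it back afterwards.
func handler(w http.ResponseWriter, r *http.Request) {
	c, err := r.Cookie("visitor")
	if err != nil { // no cookie yet: this is the first visit
		http.SetCookie(w, &http.Cookie{Name: "visitor", Value: "abc123", Path: "/", HttpOnly: true})
		fmt.Fprint(w, "hello, new visitor")
		return
	}
	fmt.Fprint(w, "welcome back, "+c.Value)
}

// RoundTrip plays the browser's part: it stores the cookies from the first
// response and sends them along with the second request.
func RoundTrip() (setCookie, secondBody string) {
	first := httptest.NewRecorder()
	handler(first, httptest.NewRequest("GET", "/", nil))
	setCookie = first.Header().Get("Set-Cookie")

	req := httptest.NewRequest("GET", "/", nil)
	for _, c := range first.Result().Cookies() {
		req.AddCookie(c) // becomes the Cookie request header
	}
	second := httptest.NewRecorder()
	handler(second, req)
	return setCookie, second.Body.String()
}

func main() {
	setCookie, body := RoundTrip()
	fmt.Println("Set-Cookie:", setCookie)
	fmt.Println("second response:", body)
}
```

The `Path` and `HttpOnly` fields are examples of the optional "rules" mentioned above.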

Pretty much all websites use cookies in one way or another. They're one of the first implementations of state/storage on the web, and pretty much every browser supports them. Also, fun note: because they were one of the first ways to know which user is accessing a website, they were also heavily abused by companies to track you across websites. The term "third-party cookie" comes from the fact that a cookie, without the proper rules or browser protection, can be [in summary] read by any server that the current website calls. So things like advertising networks can set cookies in your browser to build and track your profile on the internet, without you even knowing or acknowledging it. Nowadays there are some regulations, primarily in Europe with the General Data Protection Regulation (GDPR), which is why you always see the "We use Cookies" pop-up on websites you visit. I beg you to actually click "Decline" or "More options" and remove any cookie labeled "Non-essential".

Small Challenges and Workarounds

But returning to the topic, using this simple standard wasn't as easy as I thought. The code itself isn't that difficult, and thankfully Go has an incredible standard library for handling HTTP requests and responses. The most difficult part was working around limitations and some security concerns.

Cookie Limitations

The main limitation that I stumbled upon was trying to have structured data in a cookie. JSON is pretty much the standard for storing and transferring structured data on the web, so that was my first go-to. However, as you may know, cookies can't use any of these characters: ( ) < > @ , ; : \ " / [ ] ? = { }. And well, when a JSON file looks {"like":"this"}, you can see that using JSON is pretty much impossible. Go's http.SetCookie function automatically strips " from the cookie's value, and the other characters can go in the Set-Cookie header, but can cause problems.

On my first try, I only noticed the stripping of the " character (and not the other characters), so I needed to find a workaround. After some thinking, I started implementing my own data format. I'm learning Go, and this could be an opportunity to also understand how Go's JSON parsing, and struct tags in particular, work, and to try to implement something similar.

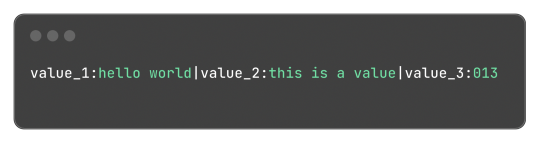

My idea was to make something similar to JSON in one way or another, and I ended up with:

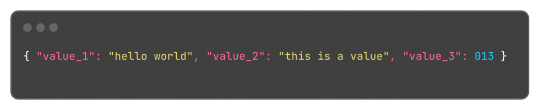

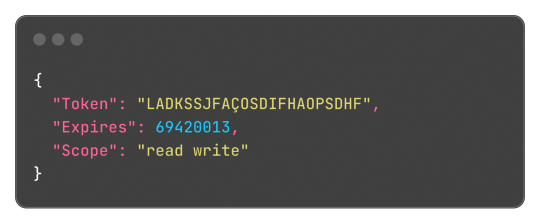

Which, for reference, in JSON would be:

This format is something very easy to implement, just using strings.Split does most of the job of extracting the values and strings.Join to "encode" the values back. Yes, this isn't a "production ready" format or anything like that, but it is hacky and just a small fix for small amounts of structured data.
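The post's actual format is only shown in the images, so the sketch below is not that format. It assumes a made-up layout of `key~value` pairs joined with `|`, purely to show how far strings.Split and strings.Join get you. It also assumes keys and values never contain the separators.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// Encode joins key/value pairs as "k~v|k~v". The separators are an
// assumption for this sketch. Keys are sorted so the output is stable.
func Encode(fields map[string]string) string {
	keys := make([]string, 0, len(fields))
	for k := range fields {
		keys = append(keys, k)
	}
	sort.Strings(keys)
	parts := make([]string, 0, len(keys))
	for _, k := range keys {
		parts = append(parts, k+"~"+fields[k])
	}
	return strings.Join(parts, "|")
}

// Decode reverses Encode. Pairs without a "~" are skipped.
func Decode(s string) map[string]string {
	out := map[string]string{}
	if s == "" {
		return out
	}
	for _, part := range strings.Split(s, "|") {
		kv := strings.SplitN(part, "~", 2)
		if len(kv) == 2 {
			out[kv[0]] = kv[1]
		}
	}
	return out
}

func main() {
	enc := Encode(map[string]string{"user": "ana", "theme": "dark"})
	fmt.Println(enc)
	fmt.Println(Decode(enc)["user"])
}
```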

Go's Struct Tags

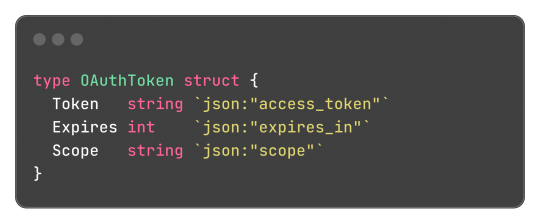

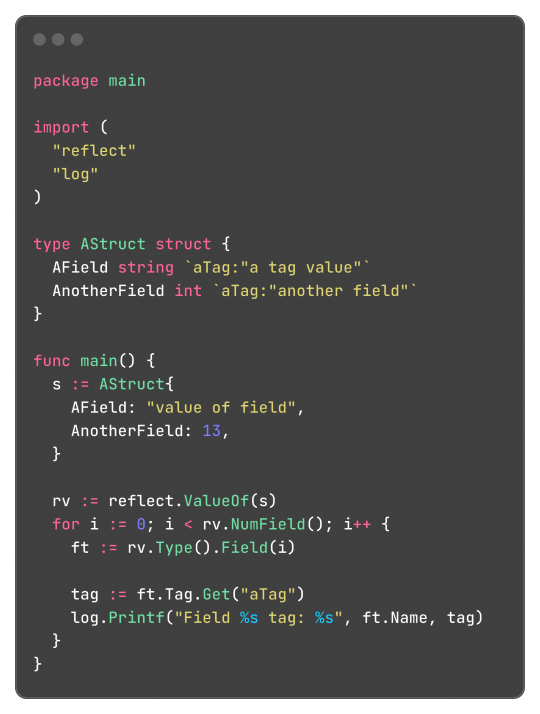

Go has an interesting and, to be honest, very clever feature called Struct Tags, which are a simple way to add metadata to Structs. They are simple strings that are added to each field and can contain key-value data:

Said metadata can be used by things such as the encoding/json package to transform said struct into a JSON object with the correct field names:

Without said tags, the output JSON would be:

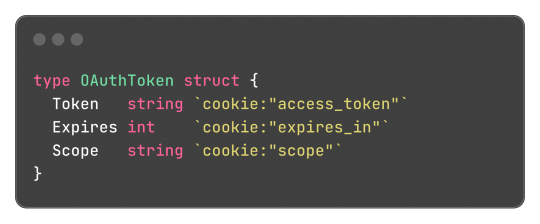

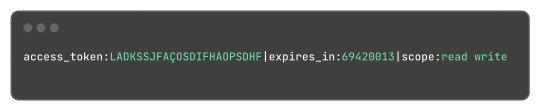

This works both for encoding and decoding the data, so the package can correctly map the JSON field "access_token" to the struct field "Token".

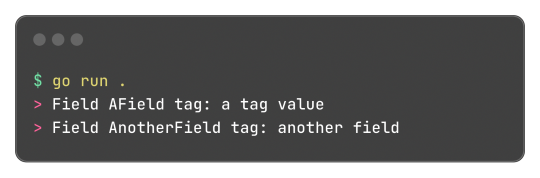

And well, these tags aren't limited to some sort of special syntax. Any key-value pair can be added and accessed with the reflect package, something like this:
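A minimal sketch of reading a custom tag with reflect follows. The `cookie` tag key, the `Session` struct and the `TagNames` helper are hypothetical names picked for the example, not the ones from the project.

```go
package main

import (
	"fmt"
	"reflect"
)

// Session uses a made-up "cookie" tag key to carry short field names.
type Session struct {
	Token string `cookie:"tok"`
	User  string `cookie:"usr"`
	Note  string // no tag: ignored by TagNames
}

// TagNames maps each struct field name to its tag value for the given key.
// v must be a struct value. Fields without the tag are skipped.
func TagNames(v any, key string) map[string]string {
	out := map[string]string{}
	t := reflect.TypeOf(v)
	for i := 0; i < t.NumField(); i++ {
		f := t.Field(i)
		if name, ok := f.Tag.Lookup(key); ok {
			out[f.Name] = name
		}
	}
	return out
}

func main() {
	fmt.Println(TagNames(Session{}, "cookie")) // map[Token:tok User:usr]
}
```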

Learning this feature and the reflect package itself, empowered me to do a very simple encoding and decoding of the format where:

Can be transformed into:

And that's what I did, and the [basic] implementation source code just has 150 lines of code, not counting the test file to be sure it worked. It works, and now I can store structured data in cookies.

Legacy in Less Than 3 Weeks

And today, I found that I can just use url.PathEscape, which escapes most of the ( ) < > @ , ; : \ " / [ ] ? = { } characters (it leaves @ : = alone, while url.QueryEscape escapes all of them), so the result can be used both in URLs and, surprise, cookie values. Not only that, but something like base64.URLEncoding would also work just fine. You live and you learn, y'know, that's what I love about engineering.
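For comparison with the custom format, this is roughly what the base64 route could look like: marshal to JSON, then encode with base64.URLEncoding so the value contains no quotes or braces. The `Prefs` struct and function names are placeholders for whatever data the project actually stores.

```go
package main

import (
	"encoding/base64"
	"encoding/json"
	"fmt"
)

// Prefs is a placeholder for whatever structured data goes in the cookie.
type Prefs struct {
	Theme string `json:"theme"`
	Lang  string `json:"lang"`
}

// EncodeCookieValue turns p into JSON and then into URL-safe base64.
func EncodeCookieValue(p Prefs) (string, error) {
	raw, err := json.Marshal(p)
	if err != nil {
		return "", err
	}
	return base64.URLEncoding.EncodeToString(raw), nil
}

// DecodeCookieValue reverses EncodeCookieValue.
func DecodeCookieValue(s string) (Prefs, error) {
	var p Prefs
	raw, err := base64.URLEncoding.DecodeString(s)
	if err != nil {
		return p, err
	}
	err = json.Unmarshal(raw, &p)
	return p, err
}

func main() {
	enc, err := EncodeCookieValue(Prefs{Theme: "dark", Lang: "pt"})
	if err != nil {
		panic(err)
	}
	fmt.Println(enc)
	dec, err := DecodeCookieValue(enc)
	fmt.Println(dec, err)
}
```

base64.URLEncoding pads its output with `=`. If that is unwanted, base64.RawURLEncoding is the unpadded variant.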

Security Concerns and Refactoring Everything

Another limitation, and mostly a worry for me, is storing access tokens in cookies. A cookie by default isn't that secure, and can be easily accessed by JavaScript and browser extensions. There are ways to block and secure cookies, but even then, you can just open the developer tools of the browser and see them easily. Even though the only way for something malicious to happen with these tokens is if the actual client ends up being compromised, which means the user has bigger problems than just a social media token being leaked, it's better to try preventing these issues nonetheless (and learn something new as always).

The encryption and decryption part isn't so difficult, since Go already provides packages for encryption under the crypto module. So I just implemented an encryption that ciphers a string based on a key from an environment variable, which I will change every month or so to improve security even more.
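The post doesn't say which cipher is used, so the following is only one possible shape for it: AES-GCM from the standard crypto packages, with the key passed in as bytes. In the real project the key would presumably be read from the environment variable. The function names are my own.

```go
package main

import (
	"crypto/aes"
	"crypto/cipher"
	"crypto/rand"
	"encoding/base64"
	"errors"
	"fmt"
)

// newGCM builds an AES-GCM AEAD from a 16, 24 or 32 byte key.
func newGCM(key []byte) (cipher.AEAD, error) {
	block, err := aes.NewCipher(key)
	if err != nil {
		return nil, err
	}
	return cipher.NewGCM(block)
}

// Encrypt seals plaintext under a fresh random nonce and returns
// base64(nonce || ciphertext) so the result is safe to put in a header.
func Encrypt(key []byte, plaintext string) (string, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return "", err
	}
	nonce := make([]byte, gcm.NonceSize())
	if _, err := rand.Read(nonce); err != nil {
		return "", err
	}
	sealed := gcm.Seal(nonce, nonce, []byte(plaintext), nil)
	return base64.URLEncoding.EncodeToString(sealed), nil
}

// Decrypt reverses Encrypt. It fails if the value was modified or if a
// different key is used.
func Decrypt(key []byte, encoded string) (string, error) {
	gcm, err := newGCM(key)
	if err != nil {
		return "", err
	}
	data, err := base64.URLEncoding.DecodeString(encoded)
	if err != nil {
		return "", err
	}
	if len(data) < gcm.NonceSize() {
		return "", errors.New("ciphertext too short")
	}
	nonce, ct := data[:gcm.NonceSize()], data[gcm.NonceSize():]
	plain, err := gcm.Open(nil, nonce, ct, nil)
	if err != nil {
		return "", err
	}
	return string(plain), nil
}

func main() {
	key := []byte("0123456789abcdef0123456789abcdef") // demo key only, 32 bytes
	enc, err := Encrypt(key, "my-access-token")
	if err != nil {
		panic(err)
	}
	dec, err := Decrypt(key, enc)
	fmt.Println(dec, err)
}
```

One consequence of rotating the key is that cookies encrypted under the old key stop decrypting, so users would have to sign in again after each rotation.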

Doing this encryption on every endpoint would be repetitive, so adding a middleware would be a solution. I had already made a small abstraction over Go's default router (the DefaultMuxServer struct), which, I'm going to be honest, wasn't the best abstraction, since it deviated a lot from the conventions of Go's default HTTP package. This deviation would also complicate the implementation of a generic middleware that I could use in any route, or even in any function that handles HTTP requests, so a refactor was needed.

The refactor made me rewrite and simplify a lot of the project's code. All routes are now structs that implement the http.Handler interface, so I can use them outside the application router and test them if needed. The router ends up being just a helper for having all routes in a struct, instead of multiple mux.HandleFunc calls in a function, and it also handles adding middlewares to all routes. Middlewares end up being just a struct that can return a wrapped HandlerFunc function, which the router calls using a custom/wrapped implementation of the http.ResponseWriter interface, so middlewares can actually modify the content and headers of the response. The refactor had 1148 lines added and 524 removed.

For the encryption middleware, it encrypts all cookie values that are set in the Set-Cookie header, and decrypts any incoming cookie. Also, the encrypted result is encoded to base64, so it can safely be set in the Set-Cookie header after being cyphered.
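The project's middleware isn't shown in the post, so this is a guess at the general shape rather than its actual code. It wraps http.ResponseWriter to rewrite Set-Cookie values just before the headers go out, and it decodes incoming cookies before the handler sees them. To keep it short, plain base64 stands in for the real encryption, and `Codec`, `cookieWriter`, `CookieCodec` and `Demo` are invented names.

```go
package main

import (
	"encoding/base64"
	"fmt"
	"net/http"
	"net/http/httptest"
)

// Codec is a stand-in for the real encrypt/decrypt pair.
type Codec struct {
	Encode func(string) string
	Decode func(string) (string, error)
}

// cookieWriter rewrites pending Set-Cookie values before headers are sent.
type cookieWriter struct {
	http.ResponseWriter
	codec Codec
	done  bool
}

func (w *cookieWriter) rewrite() {
	if w.done {
		return
	}
	w.done = true
	// Parse the Set-Cookie lines the handler added so far.
	pending := (&http.Response{Header: w.Header()}).Cookies()
	w.Header().Del("Set-Cookie")
	for _, c := range pending {
		c.Value = w.codec.Encode(c.Value)
		w.Header().Add("Set-Cookie", c.String())
	}
}

func (w *cookieWriter) WriteHeader(code int) {
	w.rewrite()
	w.ResponseWriter.WriteHeader(code)
}

func (w *cookieWriter) Write(b []byte) (int, error) {
	w.rewrite()
	return w.ResponseWriter.Write(b)
}

// CookieCodec wraps next so handlers only ever read and set plain values.
// Incoming cookies that fail to decode are dropped.
func CookieCodec(codec Codec, next http.Handler) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		incoming := r.Cookies()
		r.Header.Del("Cookie")
		for _, c := range incoming {
			if v, err := codec.Decode(c.Value); err == nil {
				r.AddCookie(&http.Cookie{Name: c.Name, Value: v})
			}
		}
		cw := &cookieWriter{ResponseWriter: w, codec: codec}
		next.ServeHTTP(cw, r)
		cw.rewrite() // covers handlers that never call Write or WriteHeader
	})
}

// Demo sends one request through the middleware and returns the Set-Cookie
// header the client receives and the body the handler wrote.
func Demo() (setCookie, body string) {
	codec := Codec{
		Encode: func(s string) string { return base64.URLEncoding.EncodeToString([]byte(s)) },
		Decode: func(s string) (string, error) {
			b, err := base64.URLEncoding.DecodeString(s)
			return string(b), err
		},
	}
	h := CookieCodec(codec, http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		http.SetCookie(w, &http.Cookie{Name: "out", Value: "hello"})
		if c, err := r.Cookie("in"); err == nil {
			fmt.Fprint(w, c.Value) // the handler sees the decoded value
		}
	}))
	req := httptest.NewRequest("GET", "/", nil)
	req.AddCookie(&http.Cookie{Name: "in", Value: codec.Encode("hi")})
	rec := httptest.NewRecorder()
	h.ServeHTTP(rec, req)
	return rec.Header().Get("Set-Cookie"), rec.Body.String()
}

func main() {
	fmt.Println(Demo())
}
```

Swapping the base64 stand-in for a real encrypt/decrypt pair should only require a different `Codec` value, since the wrapper itself does not care what the transformation is.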

---

And that's what I worked on over these last three days, today being the one where I actually used all this functionality and actually implemented the OAuth2 process, using an interface and a default implementation that I can easily reimplement for some special cases like Mastodon's OAuth process (since the token and OAuth application need to be created on each instance separately). It has been interesting learning Go and trying to be more effective and implement things the way the language wants. Everything has been very simple nonetheless, mostly just needing to align my mind with the language.

It has been a while since I wrote one of these long posts, and I remembered why: it takes hours to do, but it's worth the work I would say. Unfortunately I can't write these every day, but hopefully they will become more common, so I can better log the process of working on the projects. Also, for the 2 people that read this blog, give me some feedback! I really would like to know if there's anything I could improve in the writing, anything that ended up being confusing, or even how I could write the image descriptions for the code snippets. I'm not sure how to make them more accessible for screen reader users.

Nevertheless, completing this project will also help me make these posts, since the conversion from Markdown to Tumblr's NPF in the web editor sucks ass, and I know I can do it better.


Wish List For A Game Profiler

I want a profiler for game development. No existing profiler currently collects the data I need. No existing profiler displays it in the format I want. No existing profiler filters and aggregates profiling data for games specifically.

I want to know what makes my game lag. Sure, I also care about certain operations taking longer than usual, or about inefficient resource usage in the worker thread. The most important question that no current profiler answers is: In the frames that currently do lag, what is the critical path that makes them take too long? Which function should I optimise first to reduce lag the most?

I know that, with the right profiler, these questions could be answered automatically.

Hybrid Sampling Profiler

My dream profiler would be a hybrid sampling/instrumenting design. It would be a sampling profiler like Austin (https://github.com/P403n1x87/austin), but a handful of key functions would be instrumented in addition to the sampling: Displaying a new frame/waiting for vsync, reading inputs, draw calls to the GPU, spawning threads, opening files and sockets, and similar operations should always be tracked. Even if displaying a frame is not a heavy operation, it is still important to measure exactly when it happens, if not how long it takes. If a draw call returns right away, and the real work on the GPU begins immediately, it’s still useful to know when the GPU started working. Without knowing exactly when inputs are read, and when a frame is displayed, it is difficult to know if a frame is lagging. Especially when those operations are fast, they are likely to be missed by a sampling profiler.
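As a sketch of why exact timestamps for instrumented events matter, the code below derives frame durations from "present" events and flags the frames that blow a time budget. The `Event` type, the event kind names and the microsecond timestamps are assumptions for illustration, not part of any existing profiler.

```go
package main

import "fmt"

// Event is one instrumented occurrence with an exact timestamp.
type Event struct {
	Kind     string // e.g. "present", "read-input", "draw-call"
	AtMicros int64
}

// FrameDurations returns the time between consecutive "present" events.
func FrameDurations(events []Event) []int64 {
	var out []int64
	var last int64
	seen := false
	for _, e := range events {
		if e.Kind != "present" {
			continue
		}
		if seen {
			out = append(out, e.AtMicros-last)
		}
		last, seen = e.AtMicros, true
	}
	return out
}

// LaggingFrames returns the indices of frames longer than the budget.
func LaggingFrames(durations []int64, budgetMicros int64) []int {
	var out []int
	for i, d := range durations {
		if d > budgetMicros {
			out = append(out, i)
		}
	}
	return out
}

func main() {
	events := []Event{
		{Kind: "present", AtMicros: 0},
		{Kind: "read-input", AtMicros: 3000},
		{Kind: "present", AtMicros: 16000},
		{Kind: "present", AtMicros: 50000},
	}
	d := FrameDurations(events)
	fmt.Println(d, LaggingFrames(d, 16667))
}
```

A pure sampling profiler could easily miss a "present" call that returns in microseconds, which is why these events are recorded explicitly here.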

Tracking Other Resources

It would be a good idea to collect CPU core utilisation, GPU utilisation, and memory allocation/usage as well. What does it mean when one thread spends all of its time in that function? Is it idling? Is it busy-waiting? Is it waiting for another thread? Which one?

It would also be nice to know if a thread is waiting for IO. This is probably a “heavy” operation and would slow the game down.

There are many different vendor-specific tools for GPU debugging, some old ones that worked well for OpenGL but are no longer developed, open-source tools that require source code changes in your game, and the newest ones directly from GPU manufacturers that only support DirectX 12 or Vulkan, but no OpenGL or graphics card that was built before 2018. It would probably be better to err on the side of collecting less data and supporting more hardware and graphics APIs.

The profiler should collect enough data to answer questions like: Why is my game lagging even though the CPU is utilised at 60% and the GPU is utilised at 30%? During that function call in the main thread, was the GPU doing something, and were the other cores idling?

Engine/Framework/Scripting Aware

The profiler knows which samples/stack frames are inside gameplay or engine code, native or interpreted code, project-specific or third-party code.

In my experience, it’s not particularly useful to know that the code spent 50% of the time in ceval.c, or 40% of the time in SDL_LowerBlit, but that’s the level of granularity provided by many profilers.

Instead, the profiler should record interpreted code, and allow the game to set a hint if the game is in turn interpreting code. For example, if there is a dialogue engine, that engine could set a global “interpreting dialogue” flag and a “current conversation file and line” variable based on source maps, and the profiler would record those, instead of stopping at the dialogue interpreter-loop function.

Of course, this feature requires some cooperation from the game engine or scripting language.

Catching Common Performance Mistakes

With a hybrid sampling/instrumenting profiler that knows about frames or game state update steps, it is possible to instrument many or most “heavy“ functions. Maybe this functionality should be turned off by default. If most “heavy functions“, for example “parsing a TTF file to create a font object“, are instrumented, the profiler can automatically highlight a mistake when the programmer loads a font from disk during every frame, a hundred frames in a row.

This would not be part of the sampling stage, but part of the visualisation/analysis stage.
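A rough sketch of that analysis step: given instrumented "heavy" calls tagged with a frame number, report any function that shows up in several consecutive frames. The `HeavyCall` type, the function names in the data and the threshold are invented for the example.

```go
package main

import (
	"fmt"
	"sort"
)

// HeavyCall is one instrumented call, tagged with the frame it happened in.
type HeavyCall struct {
	Frame int
	Name  string
}

// RepeatedEveryFrame returns the names of calls that occur in at least
// minRun consecutive frames, sorted alphabetically.
func RepeatedEveryFrame(calls []HeavyCall, minRun int) []string {
	frames := map[string]map[int]bool{}
	for _, c := range calls {
		if frames[c.Name] == nil {
			frames[c.Name] = map[int]bool{}
		}
		frames[c.Name][c.Frame] = true
	}
	var flagged []string
	for name, set := range frames {
		for f := range set {
			if set[f-1] {
				continue // f is not the start of a run
			}
			run := 1
			for set[f+run] {
				run++
			}
			if run >= minRun {
				flagged = append(flagged, name)
				break
			}
		}
	}
	sort.Strings(flagged)
	return flagged
}

func main() {
	var calls []HeavyCall
	for f := 1; f <= 100; f++ {
		calls = append(calls, HeavyCall{Frame: f, Name: "LoadFontFromDisk"})
	}
	calls = append(calls, HeavyCall{Frame: 1, Name: "LoadLevel"})
	fmt.Println(RepeatedEveryFrame(calls, 100)) // [LoadFontFromDisk]
}
```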

Filtering for User Experience

If the profiler knows how long a frame takes, and how much time is spent waiting during each frame, we can safely disregard those frames that complete quickly, with some time to spare. The frames that concern us are those that lag, or those that are dropped. For example, imagine a game spends 30% of its CPU time on culling, and 10% on collision detection. You would think to optimise the culling. But what if the collision detection takes 1 ms during most frames, culling always takes 8 ms, and whenever the player fires a bullet, the collision detection causes a lag spike? The time spent on culling is not the problem here.

This would probably not be part of the sampling stage, but part of the visualisation/analysis stage. Still, you could use this information to discard “fast enough“ frames and re-use the memory, and only focus on keeping profiling information from the worst cases.
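The culling versus collision example can be played through in a few lines. The numbers below are made up to match the story (culling always 8 ms, collision 1 ms except for one 20 ms spike), and `Frame`, `SlowFrames`, `TopByTime` and `Sample` are illustrative names.

```go
package main

import "fmt"

// Frame maps a function name to the milliseconds spent in it that frame.
type Frame map[string]float64

// total returns the frame's overall time in milliseconds.
func total(f Frame) float64 {
	var sum float64
	for _, ms := range f {
		sum += ms
	}
	return sum
}

// SlowFrames keeps only the frames that exceed the budget.
func SlowFrames(frames []Frame, budgetMs float64) []Frame {
	var out []Frame
	for _, f := range frames {
		if total(f) > budgetMs {
			out = append(out, f)
		}
	}
	return out
}

// TopByTime returns the function with the most summed time across frames.
// Ties are broken alphabetically so the result is deterministic.
func TopByTime(frames []Frame) string {
	totals := map[string]float64{}
	for _, f := range frames {
		for name, ms := range f {
			totals[name] += ms
		}
	}
	best := ""
	for name, ms := range totals {
		if best == "" || ms > totals[best] || (ms == totals[best] && name < best) {
			best = name
		}
	}
	return best
}

// Sample builds 99 normal frames plus one frame with a collision spike.
func Sample() []Frame {
	var frames []Frame
	for i := 0; i < 99; i++ {
		frames = append(frames, Frame{"culling": 8, "collision": 1})
	}
	return append(frames, Frame{"culling": 8, "collision": 20})
}

func main() {
	frames := Sample()
	fmt.Println("all frames:", TopByTime(frames))                        // culling
	fmt.Println("slow frames only:", TopByTime(SlowFrames(frames, 16.7))) // collision
}
```

Aggregated over all frames, culling looks like the hot spot. Restricted to the frames that miss the 16.7 ms budget, collision detection comes out on top.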

Aggregating By Code Paths

This is easier when you don’t use an engine, but it can probably also be done if the profiler is “engine-aware”. It would require some per-engine custom code though. Instead of saying “The game spent 30% of the time doing vector addition“, or smarter “The game spent 10% of the frames that lagged most in the MobAIRebuildMesh function“, I want the game to distinguish between game states like “inventory menu“, “spell targeting (first person)“ or “switching to adjacent area“. If the game does not use a data-driven engine, but multiple hand-written game loops, these states can easily be distinguished (but perhaps not labelled) by comparing call stacks: Different states with different game loops call the code to update the screen from different places – and different code paths could have completely different performance characteristics, so it makes sense to evaluate them separately.

Because the hypothetical hybrid profiler instruments key functions, enough call stack information to distinguish different code paths is usually available, and the profiler might be able to automatically distinguish between the loading screen, the main menu, and the game world, without any need for the code to give hints to the profiler.

This could also help to keep the memory usage of the profiler down without discarding too much interesting information, by only keeping the 100 worst frames per code path. This way, the profiler can collect performance data on the gameplay without running out of RAM during the loading screen.
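Keeping only the worst frames per code path could be sketched like this. The `Path` string stands in for whatever key the profiler derives from call stacks, and `WorstKeeper` is a hypothetical name. It re-sorts on every insert for brevity, where a real implementation would more likely use a heap.

```go
package main

import (
	"fmt"
	"sort"
)

// FrameRecord is one profiled frame, labelled with the code path it ran on.
type FrameRecord struct {
	Path   string // stand-in for a key derived from the call stack
	Millis float64
}

// WorstKeeper retains at most limit records per path, slowest first.
type WorstKeeper struct {
	limit  int
	byPath map[string][]FrameRecord
}

// NewWorstKeeper creates a keeper that stores up to limit frames per path.
func NewWorstKeeper(limit int) *WorstKeeper {
	return &WorstKeeper{limit: limit, byPath: map[string][]FrameRecord{}}
}

// Add inserts r and discards the fastest record if the path is over limit.
func (k *WorstKeeper) Add(r FrameRecord) {
	list := append(k.byPath[r.Path], r)
	sort.Slice(list, func(i, j int) bool { return list[i].Millis > list[j].Millis })
	if len(list) > k.limit {
		list = list[:k.limit]
	}
	k.byPath[r.Path] = list
}

// Worst returns the retained records for a path, slowest first.
func (k *WorstKeeper) Worst(path string) []FrameRecord {
	return k.byPath[path]
}

func main() {
	k := NewWorstKeeper(2)
	for _, ms := range []float64{5, 30, 12} {
		k.Add(FrameRecord{Path: "main-menu", Millis: ms})
	}
	k.Add(FrameRecord{Path: "game-world", Millis: 40})
	fmt.Println(k.Worst("main-menu"))
	fmt.Println(k.Worst("game-world"))
}
```

Because the limit applies per path, a long loading screen cannot push out the records collected during gameplay.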

In a data-driven engine like Unity, I’d expect everything to happen all the time, on the same, well-optimised code path. But this is not a wish list for a Unity profiler. This is a wish list for a profiler for your own custom game engine, glue code, and dialogue trees.

All I need is a profiler that is a little smarter, that is aware of SDL, OpenGL, Vulkan, and YarnSpinner or Ink. Ideally, I would need somebody else to write it for me.


The Ecto Moog Vanilla Experience

Updated for 1.20.4

I’m the unwilling user of a MacBook Air, and I’m also someone with strong (picky) preferences for ✨vibes✨, and so to play Minecraft casually on my laptop, I’ve had to jump through several hoops to achieve what I would consider a good vanilla experience.

At this point though, I’ve put probably too much time and effort into it, and so I thought to justify that I’d make a beginner's guide to all the mods, resource packs and game options I use, as of February 2024 (1.20.4). 👇

I will attach a hyperlink to any resources I mention :)

Mods

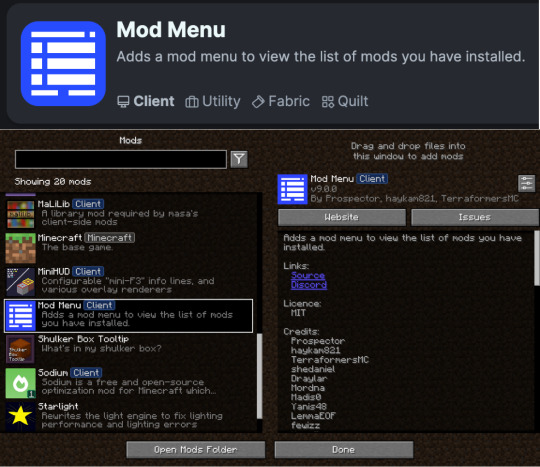

Ok so to start off, yes, technically I don't actually play true vanilla Minecraft, but the mods I have installed are all client-side, and for the purpose of improving or optimising the vanilla experience. You can find me and a list of (almost) all these mods on Modrinth.

Fabric & Modrinth (Intro)

To mod the game, I use Fabric. I know that Forge has had some… drama? recently, and I’m gonna be honest I’ve never tried Quilt (I will at some point), but Fabric is super widespread, tons of mods use it so it works just fine for me 😁. When I’m looking for mods, I really prefer to use Modrinth, just because I can follow everything and it’s a really well designed website. (I also tried their launcher but I think it needs a little work?)

As part of Fabric, I do have the Fabric API in my mods folder.

Performance & Optimisation

I use these mods to make the game run better. Minecraft is notorious for being very poorly optimised, and these mods have been made by a ton of very talented people to fix that.

Sodium

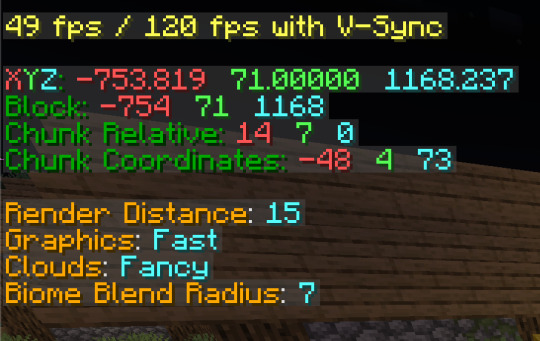

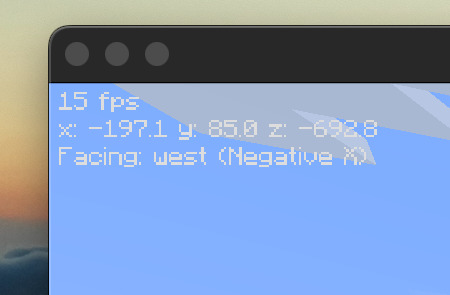

JellySquid’s Sodium is the hot optimisation mod right now for rendering and graphical fixes. It doesn’t have the cosmetic features of Optifine, like dynamic lighting or zoom, but it’s super compatible and gives me great performance. Combined with my other mods, at a 15 chunk render distance in the overworld, I averaged around 50 fps. For a MacBook, that's not bad at all.

To accompany Sodium I've also got Indium, an add-on that provides support for the Fabric Rendering API, which is required for mods that use advanced rendering effects, and Reese's Sodium Options, which adds a better options screen for Sodium’s video settings- it looks better basically.

Lithium

Lithium is a general purpose optimisation mod that improves systems like game physics, mob AI, block ticking, etc without changing vanilla mechanics.

Starlight

Starlight rewrites the entire lighting engine to fix performance and errors. Made for bigger servers, but helpful for client users. I think this is in place of Phosphor.

Dynamic FPS

Dynamic FPS can reduce the game’s FPS when it’s just running in the background- useful for a laptop.

Some more specific optimisation mods:

Entity Culling avoids rendering entities that aren’t directly in your field of view, which is much more thorough than the vanilla approach.

Ferrite Core reduces the memory usage of Minecraft in a ton of different ways.

Immediately Fast optimises how things like entities, particles, text, GUI are rendered, by using “a custom buffer implementation which batches draw calls and uploads data to the GPU in a more efficient way.”

I'm not a programmer so I can’t really explain what Krypton does, something to do with networking stacks, but I know it optimises.. things, lowers server CPU usage and reduces memory usage. I’m barely following along with a lot of these mods.

Fabric Language Kotlin is a dependency that enables the use of the Kotlin programming language for other Fabric mods. To be honest, I’ve forgotten what mod needs this but I don’t want to delete it, just in case.

Experience

These mods aren’t necessarily about performance, but they do enhance the game in a vanilla kind of way, in my opinion. Some of these could be up for debate but I do think they complement the base game.

Lamb Dynamic Lights

Lamb Dynamic Lights is a simple but thorough dynamic lighting mod that adds light-emitting handheld items, dropped items and entities.

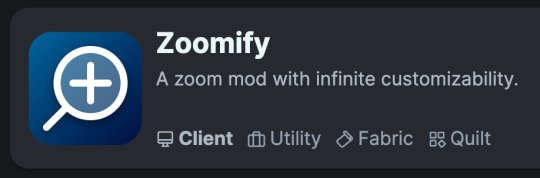

Zoomify

Zoomify is a super configurable zoom mod. Maybe this is just because of Optifine’s influence, but I think they should add zoom to the base game. At least I feel cool using it 😎

To make Zoomify work, you’ll need YetAnotherConfigLib, a config library that fills in a couple of holes.

Better F3

Better F3… makes the F3 menu better. There’s the option to customise literally everything, you can colour code, shift and delete parts of the menu depending on your use case, it’s less insane and looks a whole lot better.

MiniHUD

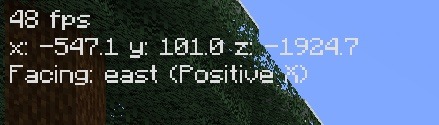

MiniHUD allows you to display customisable F3 lines on the main screen, along with several client-side overlay renderers. I just use it for the mini-F3, and I have my FPS, coords and cardinal direction in the top left corner. It’s honestly more helpful than you’d assume.

MiniHUD requires MaLiLib (masa’s Litemod Library) which is a library mod for mods made by masa and others.

ModMenu

ModMenu adds an in-game menu where you can view the mods you’re running and access their details and setting menus.

Shulker Box Tool Tip

Shulker Box Tool Tip adds a preview of the inside of a Shulker box when it’s in your inventory.

Some more specific experience mods:

Bobby is a rendering mod that allows the player to render more chunks than a server’s fixed distance, by loading in previously generated chunks saved client-side. You can also render them straight from a singleplayer world file. To be honest, I don’t often run into this issue but it’s helpful to have lol.

Cloth Config API adds a config screen for mods in-game.

Iris is a mod that makes shaders super easy, and compatible with Sodium. It's down here because I don’t use shaders often, but it’s essential if you do.

A lot of these mods are subject to change as I find better or updated alternatives, and I'm always on the lookout for more 😁

Resource Packs

For vanilla Minecraft, I actually only use one resource pack, but if you’re familiar with Vanilla Tweaks, you’ll understand why this deserves its own category.

This resource pack allows you to pick which small changes you want to add, and a lot of them are really cool. Some of my favourite tweaks are:

Dark UI

Quieter Nether Portals

Circular Sun and Moon

Numbered Hotbar

Golden Crown

The other tweaks I have are: Classic Minecraft Logo, Lower Warped Nylium, Lower Snow, Lower Crimson Nylium, Lower Podzol, Lower Paths, Lower Grass, Wither Hearts, Ping Color Indicator, Borderless Glass, Lower Fire, Lower Shield, Transparent Pumpkin, Noteblock Banners, Quieter Minecarts, Variated Unpolished Stones, Variated Terracotta, Variated Stone, Variated Logs, Variated Mushroom Blocks, Variated End Stone, Variated Gravel, Variated Mycelium, Variated Planks, Variated Bricks, Random Moss Rotation, Variated Cobblestone, Variated Grass, Random Coarse Dirt Rotation, Variated Dirt, Darker Dark Oak Leaves, Shorter Tall Grass, Circle Log Tops, Twinkling Stars, Accurate Spyglass, Unique Dyes, Animated Campfire Item, Red Iron Golem Flowers, Brown Leather, Warm Glow, Horizontal Nuggets, Different Stems, Variated Bookshelves, Connected Bookshelves.

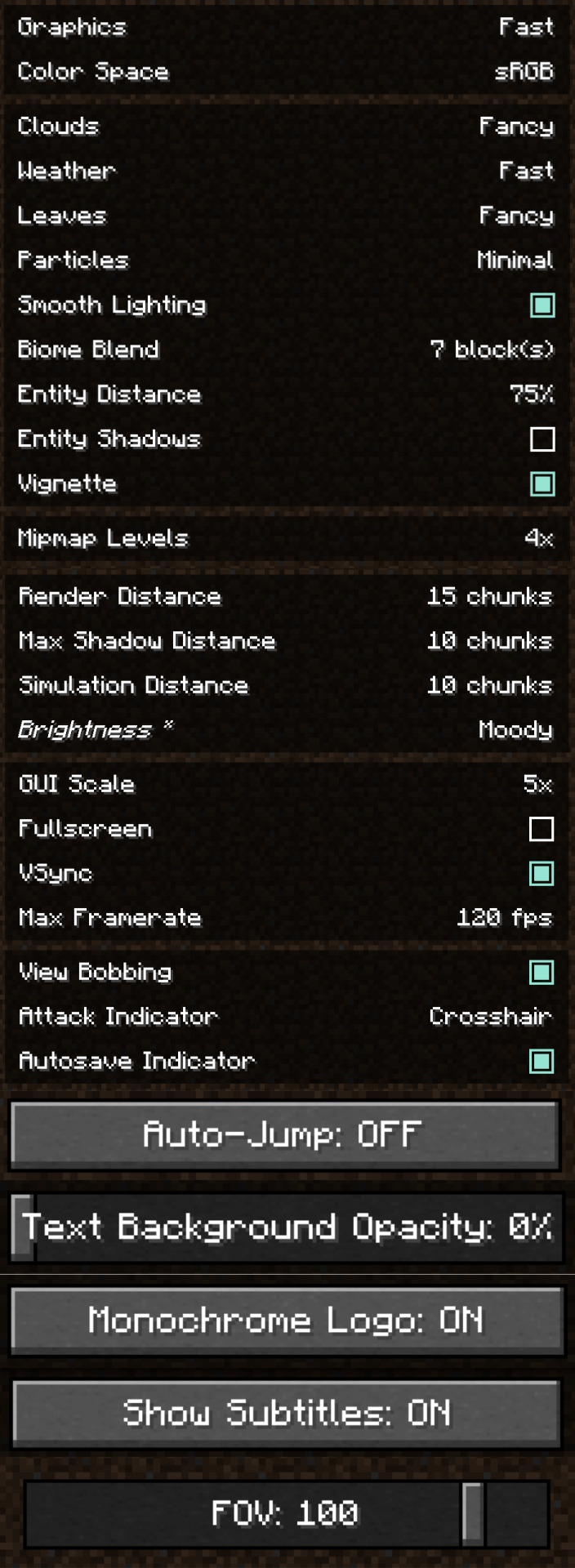

Game Options

For the sake of the full experience, here are the important game options:

The End 😇

And that's the whole thing! A lot of this could change at any moment based on my play style, but I think this is a really well rounded experience for vanilla Minecraft. Let me know if there's anything else I should try 😁

#minecraft#minecraft mods#minecraft vanilla#minecraft customization#mcyt#sodium#mods#JellySquid#ectomoog#moogposting#mineblr#minecraft survival#minecraft server#smp#minecraft smp#optifine#minecraft modding#modded minecraft


Text

Breakpoint 2025: Join the New Era of AI-Powered Testing

Introduction: A Paradigm Shift in Software Testing

Software testing has always been the silent backbone of software quality and user satisfaction. As we move into 2025, this discipline is experiencing a groundbreaking transformation. At the heart of this revolution lies AI-powered testing, a methodology that transcends traditional testing constraints by leveraging the predictive, adaptive, and intelligent capabilities of artificial intelligence. And leading the charge into this new frontier is Genqe.ai, an innovative platform redefining how quality assurance (QA) operates in the digital age.

Breakpoint 2025 is not just a milestone; it’s a wake-up call for QA professionals, developers, and businesses. It signals a shift from reactive testing to proactive quality engineering, where intelligent algorithms drive test decisions, automation evolves autonomously, and quality becomes a continuous process — not a phase.

Why Traditional Testing No Longer Suffices

In a world dominated by microservices, continuous integration/continuous delivery (CI/CD), and ever-evolving customer expectations, traditional testing methodologies are struggling to keep up. Manual testing is too slow. Rule-based automation, though helpful, still requires constant human input, test maintenance, and lacks contextual understanding.

Here’s what traditional testing is failing at:

Scalability: Increasing test cases for expanding applications manually is unsustainable.

Speed: Agile and DevOps demand faster releases, and traditional testing often becomes a bottleneck.

Complexity: Modern applications interact with third-party services, APIs, and dynamic UIs, which are harder to test with static scripts.

Coverage: Manual and semi-automated approaches often miss edge cases and real-world usage patterns.

This is where Genqe.ai steps in.

Enter Genqe.ai: Redefining QA with Artificial Intelligence

Genqe.ai is a next-generation AI-powered testing platform engineered to meet the demands of modern software development. Unlike conventional tools, Genqe.ai is built from the ground up with machine learning, deep analytics, and natural language processing capabilities.

Here’s how Genqe.ai transforms software testing in 2025:

1. Intelligent Test Case Generation

Manual test case writing is one of the most laborious tasks for QA teams. Genqe.ai automates this process by analyzing:

Product requirements

Code changes

Historical bug data

User behavior

Using this data, it generates test cases that are both relevant and comprehensive. These aren’t generic scripts — they’re dynamic, evolving test cases that cover critical paths and edge scenarios often missed by human testers.

2. Predictive Test Selection and Prioritization

Testing everything is ideal but not always practical. Genqe.ai uses predictive analytics to determine which tests are most likely to fail based on:

Recent code commits

Test history

Developer behavior

System architecture

This smart selection allows QA teams to focus on high-risk areas, reducing test cycles without compromising quality.
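The post does not describe how Genqe.ai actually scores tests, so the following is only a minimal sketch of the general idea of risk-based test selection. It assumes a simple heuristic: weight each test's historical failure rate against whether it covers a file touched by the latest commit. The names `CaseHistory`, `risk_score`, `prioritize`, and the `change_weight` parameter are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class CaseHistory:
    """Hypothetical record of one test's history (not a Genqe.ai type)."""
    name: str
    runs: int                       # total historical runs
    failures: int                   # how many of those runs failed
    covered_files: set = field(default_factory=set)


def risk_score(case, changed_files, change_weight=0.5):
    """Blend historical failure rate with overlap against changed files."""
    failure_rate = case.failures / case.runs if case.runs else 0.0
    touches_change = 1.0 if case.covered_files & changed_files else 0.0
    return (1 - change_weight) * failure_rate + change_weight * touches_change


def prioritize(cases, changed_files, top_n):
    """Return the names of the top_n highest-risk tests, riskiest first."""
    ranked = sorted(cases, key=lambda c: risk_score(c, changed_files), reverse=True)
    return [c.name for c in ranked[:top_n]]
```

A real system would presumably learn these weights from data rather than fix them by hand; the fixed weight here just keeps the example readable.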

3. Self-Healing Test Automation

A major issue with automated tests is maintenance. A minor UI change can break hundreds of test scripts. Genqe.ai offers self-healing capabilities, which allow automated tests to adapt on the fly.

By understanding the intent behind each test, the AI can adjust scripts to align with UI or backend changes — dramatically reducing flaky tests and maintenance costs.

4. Continuous Learning with Each Release

Genqe.ai doesn’t just test — it learns. With every test run, bug found, and user interaction analyzed, the system becomes smarter. This means that over time:

Tests become more accurate

Bug detection improves

Test coverage aligns more closely with actual usage

This continuous improvement creates a feedback loop that boosts QA effectiveness with each iteration.

5. Natural Language Test Authoring

Imagine writing test scenarios like this: “Verify that a user can log in with a valid email and password.”

Genqe.ai’s natural language processing (NLP) engine translates such simple sentences into fully executable test scripts. This feature democratizes testing — allowing business analysts, product owners, and non-technical stakeholders to contribute directly to the testing process.

6. Seamless CI/CD Integration

Modern development pipelines rely on tools like Jenkins, GitLab, Azure DevOps, and CircleCI. Genqe.ai integrates seamlessly into these pipelines to enable:

Automated test execution on every build

Instant feedback on code quality

Auto-generation of release readiness reports

This integration ensures that quality checks are baked into every step of the software delivery process.

7. AI-Driven Bug Detection and Root Cause Analysis

Finding a bug is one thing; understanding its root cause is another. Genqe.ai uses advanced diagnostic algorithms to:

Trace bugs to specific code changes

Suggest likely culprits

Visualize dependency chains

This drastically reduces the time spent debugging, allowing teams to fix issues faster and release more confidently.

8. Test Data Management with Intelligence

One of the biggest bottlenecks in testing is the availability of reliable, relevant, and secure test data. Genqe.ai addresses this by:

Automatically generating synthetic data

Anonymizing production data

Mapping data to test scenarios intelligently

This means tests are always backed by valid data, improving accuracy and compliance.

9. Visual and API Testing Powered by AI

Modern applications aren’t just backend code — they’re visual experiences driven by APIs. Genqe.ai supports both:

Visual Testing: Detects UI regressions using image recognition and ML-based visual diffing.

API Testing: Builds smart API assertions by learning from actual API traffic and schemas.

This comprehensive approach ensures that both functional and non-functional aspects are thoroughly validated.

10. Actionable Insights and Reporting

What gets measured gets improved. Genqe.ai provides:

Smart dashboards

AI-curated test summaries

Risk-based recommendations

These insights empower QA leaders to make data-driven decisions, allocate resources effectively, and demonstrate ROI on testing activities.

The Impact: Faster Releases, Fewer Defects, Happier Users

With Genqe.ai in place, organizations are seeing:

Up to 70% reduction in test cycle times

40% fewer production defects

3x increase in test coverage

Faster onboarding of new testers

This translates into higher customer satisfaction, reduced costs, and a competitive edge in the market.

Embrace the Future: Join the Breakpoint 2025 Movement

Breakpoint 2025 isn’t just a conference theme or buzzword — it’s a movement toward intelligent, efficient, and reliable software quality assurance. As the complexity of digital products grows, only those who embrace AI-powered tools like Genqe.ai will thrive.

Genqe.ai is more than just a tool — it’s your intelligent QA partner, working 24/7, learning continuously, and driving quality as a strategic asset, not an afterthought.

Conclusion: The Time to Act is Now

The world of QA is changing — and fast. Genqe.ai is the bridge between where your QA process is today and where it needs to be tomorrow. If you’re still relying on traditional methods, Breakpoint 2025 is your opportunity to pivot. To embrace AI. To reduce cost and increase confidence. To join a new era.

Step into the future of AI-powered testing. Join the Genqe.ai revolution.


Text

The name Palestine, or its possible predecessors, have been found in five inscriptions across countries in the Levant and North Africa, all naming it Peleset or Purusati, inscribed in hieroglyphics as P-r-s-t

Their first mention is at the temple of Ramesses, 1150 BC, where Ramesses is mentioned to have fought off the Pelesets, the last known being 300 years later on Padiiset’s Statue, reading “Ka of Osiris: Pa-di-iset, the justified, son of Apy. The only renowned one, the impartial envoy of Philistine Canaan, Pa-di-iset, son of Apy.”

“Philistine” and “Canaan” both refer to the land of Palestine. The Assyrians have called it Palashtu since 800 BC, through to an Esarhaddon treaty more than a century later

The term Palestine actually comes from the writings of Greek author Herodotus in the 5th century BC, where he calls the land “Palaistine”, which is where the Arabic term “Filastin” comes from

Now, it should be noted that there is a bit of nuance in what I am about to say next - the Iron Age Kingdom of Judah did exist, and was an Israelite kingdom - this was around 960 BC to 560 BC

However, this by no means implies that Palestine is not Palestinian land - the kingdom of Judah famously fell, after all, and Israel the state was only established after political Zionism in Britain, spurred on by catastrophes like the Holocaust and pogroms, promoted the idea of a Jewish “reclaiming” of Palestine

In 1918, the Jewish Legion helped the British conquer Palestine to create the Mandatory Palestine. This caused obvious revolts, as most acts of colonialism tend to do

Over the next few decades, things went as you might know - the Holocaust meant a massive surge in Jewish refugees, most of whom were turned away by their neighbours because, well, millions of new citizens were hard to feed, and all of whom decided that Palestine was where they would go

Of course, none of this took into consideration the fact that native Palestinians would have to be displaced from their homes and livelihoods to do it, often with violence

The Holocaust was a horrible thing, obviously - but attempting to colonise a new state out of a pre-existing one, displacing and killing thousands of native Arabs, in response was hardly a good thing, either

On 22 July 1946, the Jewish militia Irgun bombed the British headquarters in Palestine, killing 91 people. This was in response to a series of raids conducted by the British, including one on the Jewish Agency

All mediation attempts conducted over the next few years failed, as the Jewish diaspora - and more importantly, the Zionists amongst them - were unwilling to accept a solution that did not produce for them a whole new all-Jewish state in Palestine, while the native Arabs were somewhat pissed at the idea of having to leave the land of their birth due to colonialism

And it was colonialism - Zionist colonialism is still colonialism

Finally, the matter was brought before the UN, who decided to go with the Zionist idea of a separate Jewish and Arab state, with Jerusalem as an international city between them

The Irgun and other Revisionist Zionist groups rejected the idea, seeing it as an act of ceding native Jewish land. The leader of the Irgun at the time, Menachem Begin, openly stated that “the bisection of our homeland is illegal. It will never be recognized” and that the Zionists fully intended on expanding beyond their UN-set boundaries “after the shedding of much blood.”

Simha Flapan, the Israeli scholar, states that it is a myth that any Zionist groups at all accepted the partition, as most of them simply wanted to undermine the creation of an Arab state, fully intending on expanding into them later

Baruch Kimmerling, a professor at the Hebrew University of Jerusalem, has stated that Zionists “officially accepted the partition plan, but invested all their efforts towards improving its terms and maximally expanding their boundaries while reducing the number of Arabs in them”

So, yes, the Zionist myth of a peaceful, unambitious Israel is exactly that - a myth

The Arabs had a much more open reaction, rejecting the plan outright. Zionists will tell you this is because they hated Jews. It’s not. Their principal argument was that it was against the principles of national self-determination set out in the UN charter, granting them the right to decide their own destiny

In any case, over on the Zionist side of things, Jewish insurgency peaked until Irgun took two British sergeants hostage as attempted leverage against the planned execution of three Irgun officers. The officers were executed as planned, and the sergeants were murdered and hung from trees. Spurred by this, the British called for an evacuation of the Mandatory Palestine

On 26 November 1947, the UN General Assembly presented another plan for the partition of Palestine, evidently fully ignoring what the actual Palestinians had to say. In this, they assigned 56% of Palestine (notably, Arab land that already had Arab landowners) to the Jewish diaspora, who had so far owned 6-7% of the land

Arabs, the majority, held 20% of the land, and the remaining 23-24% was granted to Mandate authorities and foreign entities

Once again, the native Arabs rejected this. Not because they wanted to kill Jews. But because it clearly benefitted European interests over Palestinian ones, and was thus unacceptable

Afterwards, Israel had its way and declared independence once Mandatory Palestine was dissolved. The neighbouring Arab states, members of the Arab League, invaded with the goal of preventing the Partition

To make a long story short, they failed, and Israel annexed massive parts of Palestine in the process. The native Palestinians were then expelled from their homelands by the Zionist militias in a bloody and brutal process that would come to be known as Al-Nakba

In addition, this led to the destruction of Palestinian culture, identity, and national aspirations. They were no longer “Palestinians” - they were the expelled and discarded. Their settlements were either destroyed or given new Hebrew names

In the process the Israelis would also start their genocide against the Palestinians with a bang - the poisoning of village wells and other acts of biological warfare.

750,000 Palestinians were expelled from their ancestral homes and towns - 80% of the population living in what would become Israel. Eleven Arab towns and cities, along with over 500 villages

It’s believed that around 70 massacres occurred during the Nakba, with the Yishuv killing over 800 Arab civilians over the course of 24 of them. Other sources state that there were 10 major massacres with over 50 casualties each

The Saliha Massacre ended with 70 dead, the Deir Yassin with 112, the Lydda with 250, the Tantura with 200+ and the Abu Shusha massacre with 70 dead

And those are just the major massacres, as recognised by the Israeli historian Benny Morris. There are plenty more. Each death a living, breathing, person, whose life and death are being erased by the acts of the government of Netanyahu

The Nakba, which began on 15 May, 1948, is considered to be still ongoing today, with new deaths and massacres occurring every day. The US support Israel has enjoyed, along with their own technological breakthroughs, has only made it more effective at destruction

All to make room for a state that, I will remind you, does not have any right to exist in Palestine. It is true that there was a Jewish kingdom in Palestine once upon a time. But there was a Muslim kingdom in Spain once, too, and no one’s arguing for Arabians to go colonise Spain

The Palestinians have existed in Palestine since at least the Bronze Age, being the descendants of the Ancient Levantines extending back to the inhabitants of the Levant at the time

According to the Palestinian historian Nazmi Al-Ju'beh, like in other Arab nations, the Arab identity of Palestinians, largely based on linguistic and cultural affiliation, is independent of the existence of any actual Arabian origins

The Palestinians are the indigenous population of the land of Palestine. To displace and genocide them to make room for your own colony is not unlike what China is doing in East Turkestan or what America did to the Native Americans

All that to say - the Zionists did in Palestine what every racist and Islamophobe seems to think Muslims are trying to do in Britain

#Israel#palestine#free palestine#save palestine#i-p conflict#israel palestine conflict#israel palestine war#nakba#history#nakba 1948


Text

What Web Development Companies Do Differently for Fintech Clients

In the world of financial technology (fintech), innovation moves fast—but so do regulations, user expectations, and cyber threats. Building a fintech platform isn’t like building a regular business website. It requires a deeper understanding of compliance, performance, security, and user trust.

A professional Web Development Company that works with fintech clients follows a very different approach—tailoring everything from architecture to front-end design to meet the demands of the financial sector. So, what exactly do these companies do differently when working with fintech businesses?

Let’s break it down.

1. They Prioritize Security at Every Layer

Fintech platforms handle sensitive financial data—bank account details, personal identification, transaction histories, and more. A single breach can lead to massive financial and reputational damage.

That’s why development companies implement robust, multi-layered security from the ground up:

End-to-end encryption (both in transit and at rest)

Secure authentication (MFA, biometrics, or SSO)

Role-based access control (RBAC)

Real-time intrusion detection systems

Regular security audits and penetration testing

Security isn’t an afterthought—it’s embedded into every decision from architecture to deployment.
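To make the role-based access control item concrete, here is a minimal sketch. The roles and permission names are invented for illustration and are not taken from any particular fintech platform; a production system would typically load them from a policy store rather than a hard-coded dictionary.

```python
# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "customer": {"view_own_account"},
    "support": {"view_own_account", "view_any_account"},
    "admin": {"view_own_account", "view_any_account", "issue_refund"},
}


def is_allowed(role, permission):
    """Return True only if the role explicitly grants the permission."""
    # Unknown roles get an empty permission set, so access is denied by default.
    return permission in ROLE_PERMISSIONS.get(role, set())
```

Denying by default for unknown roles is the design choice that matters here: a typo in a role name fails closed rather than open.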

2. They Build for Compliance and Regulation

Fintech companies must comply with strict regulatory frameworks like:

PCI-DSS for handling payment data

GDPR and CCPA for user data privacy

KYC/AML requirements for financial onboarding

SOX, SOC 2, and more for enterprise-level platforms

Development teams work closely with compliance officers to ensure:

Data retention and consent mechanisms are implemented

Audit logs are stored securely and access-controlled

Reporting tools are available to meet regulatory checks

APIs and third-party tools also meet compliance standards

This legal alignment ensures the platform is launch-ready—not legally exposed.

3. They Design with User Trust in Mind

For fintech apps, user trust is everything. If your interface feels unsafe or confusing, users won’t even enter their phone number—let alone their banking details.

Fintech-focused development teams create clean, intuitive interfaces that:

Highlight transparency (e.g., fees, transaction histories)

Minimize cognitive load during onboarding

Offer instant confirmations and reassuring microinteractions

Use verified badges, secure design patterns, and trust signals

Every interaction is designed to build confidence and reduce friction.

4. They Optimize for Real-Time Performance

Fintech platforms often deal with real-time transactions—stock trading, payments, lending, crypto exchanges, etc. Slow performance or downtime isn’t just frustrating; it can cost users real money.

Agencies build highly responsive systems by:

Using event-driven architectures with real-time data flows

Integrating WebSockets for live updates (e.g., price changes)

Scaling via cloud-native infrastructure like AWS Lambda or Kubernetes

Leveraging CDNs and edge computing for global delivery

Performance is monitored continuously to ensure sub-second response times—even under load.

5. They Integrate Secure, Scalable APIs

APIs are the backbone of fintech platforms—from payment gateways to credit scoring services, loan underwriting, KYC checks, and more.

Web development companies build secure, scalable API layers that:

Authenticate via OAuth2 or JWT

Throttle requests to prevent abuse

Log every call for auditing and debugging

Easily plug into services like Plaid, Razorpay, Stripe, or banking APIs

They also document everything clearly for internal use or third-party developers who may build on top of your platform.
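Request throttling, mentioned in the list above, is often implemented with a token bucket. The sketch below is one common approach and not something the article specifies; the class name, the `rate` and `capacity` parameters, and the injectable `clock` are assumptions made for the example.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate` tokens per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock            # injectable so the bucket can be tested
        self.tokens = capacity        # start with a full bucket
        self.last = clock()

    def allow(self):
        """Consume one token if available; return whether the call may proceed."""
        now = self.clock()
        # Refill in proportion to elapsed time, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

In practice one bucket would be kept per API key or client IP, and a rejected call would usually be answered with HTTP 429.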

6. They Embrace Modular, Scalable Architecture

Fintech platforms evolve fast. New features—loan calculators, financial dashboards, user wallets—need to be rolled out frequently without breaking the system.

That’s why agencies use modular architecture principles:

Microservices for independent functionality

Scalable front-end frameworks (React, Angular)

Database sharding for performance at scale

Containerization (e.g., Docker) for easy deployment

This allows features to be developed, tested, and launched independently, enabling faster iteration and innovation.

7. They Build for Cross-Platform Access

Fintech users interact through mobile apps, web portals, embedded widgets, and sometimes even smartwatches. Development companies ensure consistent experiences across all platforms.

They use:

Responsive design with mobile-first approaches

Progressive Web Apps (PWAs) for fast, installable web portals

API-first design for reuse across multiple front-ends

Accessibility features (WCAG compliance) to serve all user groups

Cross-platform readiness expands your market and supports omnichannel experiences.

Conclusion

Fintech development is not just about great design or clean code—it’s about precision, trust, compliance, and performance. From data encryption and real-time APIs to regulatory compliance and user-centric UI, the stakes are much higher than in a standard website build.

That’s why working with a Web Development Company that understands the unique challenges of the financial sector is essential. With the right partner, you get more than a website—you get a secure, scalable, and regulation-ready platform built for real growth in a high-stakes industry.


Text

How a Network Load Balancer Maximizes Uptime and Performance Across Traffic Spikes

A network load balancer is a critical component in today’s digital infrastructure, especially for businesses that rely heavily on web applications, APIs, or cloud-based services. It ensures the seamless distribution of incoming network traffic across multiple servers, preventing any single server from becoming overwhelmed. By intelligently managing traffic, a network load balancer enhances the efficiency, reliability, and scalability of your system. In the era of 24/7 digital access and unpredictable surges in traffic, this technology is indispensable for maintaining performance. Whether for e-commerce, finance, gaming, or media platforms, a network load balancer plays a central role in maintaining service continuity.

Ensures High Availability During Peak User Demand

During times of peak demand—such as sales events, product launches, or viral content spikes—a network load balancer ensures high availability by evenly routing requests to healthy, responsive servers. It prevents bottlenecks and ensures users get uninterrupted access, regardless of how many are connected simultaneously. Without a load balancer, even the most robust infrastructure can crumble under pressure. By maintaining service availability even during high traffic, a network load balancer helps uphold user satisfaction and brand reputation. It acts as a strategic buffer that intelligently controls traffic surges to maintain a consistent user experience without slowing down or crashing systems.

Balances Traffic Loads Across Multiple Servers

A key function of a network load balancer is to distribute workloads across multiple servers, ensuring no single resource is overburdened. This improves server utilization and reduces the risk of hardware failure due to excessive strain. When configured correctly, a network load balancer continuously monitors server performance and redirects requests based on real-time capacity and responsiveness. This balancing act is vital in maintaining operational stability across the infrastructure. Whether handling millions of API calls or hundreds of database queries per second, the ability of the load balancer to evenly allocate traffic keeps applications responsive and infrastructure costs optimized.

Minimizes Downtime with Real-Time Failover Support

One of the most valuable benefits of a network load balancer is its real-time failover capability. When a server goes offline due to hardware failure, overload, or scheduled maintenance, the load balancer detects this through health checks and reroutes traffic to other healthy nodes. This automated process minimizes downtime and ensures business continuity. Failover support not only protects against unexpected server failures but also allows system administrators to perform updates and maintenance without disrupting user access. In high-availability systems where every second of uptime matters, having a network load balancer with failover functionality is crucial to delivering uninterrupted service.
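The failover behaviour described above can be sketched as a pool that tracks consecutive failed health checks and skips any server over a threshold. This is a simplified illustration, not how any specific load balancer implements it; `FailoverPool`, `report`, and `max_failures` are hypothetical names.

```python
class FailoverPool:
    """Route to the first server that has not failed too many health checks."""

    def __init__(self, servers, max_failures=2):
        self.failures = {s: 0 for s in servers}   # consecutive failed checks
        self.max_failures = max_failures

    def report(self, server, ok):
        """Record a health-check result; a success resets the failure count."""
        self.failures[server] = 0 if ok else self.failures[server] + 1

    def healthy(self):
        """Servers still below the failure threshold, in configured order."""
        return [s for s, f in self.failures.items() if f < self.max_failures]

    def pick(self):
        """Return a healthy server, or raise if none are left."""
        candidates = self.healthy()
        if not candidates:
            raise RuntimeError("no healthy servers")
        return candidates[0]
```

Requiring more than one consecutive failure before removing a server avoids flapping on a single dropped probe, at the cost of slightly slower detection.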

A Network Load Balancer Distributes Traffic Efficiently

Efficiency is at the core of what a network load balancer delivers. It evaluates incoming traffic, analyzes the state of backend servers, and makes quick routing decisions to distribute data streams where they are most efficiently handled. This not only reduces latency but also maximizes resource utilization and processing speed. By employing algorithms like round-robin, least connections, or IP-hash-based distribution, the network load balancer ensures optimized delivery paths. Efficient distribution of requests directly correlates with improved user experiences and smoother application workflows. Whether traffic is steady or erratic, the network load balancer keeps the system operating at peak efficiency.
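The three algorithms named above are simple enough to sketch in a few lines each. The function names and the choice of SHA-256 for the IP hash are assumptions for this example; real load balancers use their own hashing schemes and track connection counts internally.

```python
import hashlib
import itertools


def round_robin(servers):
    """Yield servers in order, wrapping around forever."""
    return itertools.cycle(servers)


def least_connections(active):
    """Pick the server with the fewest active connections.

    `active` maps server name to its current connection count.
    """
    return min(active, key=active.get)


def ip_hash(client_ip, servers):
    """Map a client IP to a server so the same client keeps hitting the same one."""
    digest = hashlib.sha256(client_ip.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

For example, `next(round_robin(["a", "b"]))` returns `"a"`, and `least_connections({"a": 5, "b": 2})` returns `"b"`. Note that this plain modulo hash remaps most clients whenever the server list changes size; consistent hashing is the usual remedy when that matters.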

Adapts to Traffic Spikes with Scalable Routing

A modern network load balancer can dynamically adapt to sudden traffic spikes by leveraging scalable routing techniques. When user requests surge, it quickly allocates new server resources, often within cloud environments, to handle the increase without slowing down performance. This auto-scaling behavior is essential for applications that experience fluctuating demand, such as e-commerce platforms during holiday seasons or streaming services during live events. The ability to adjust routing rules in real-time allows the load balancer to maintain high throughput and low latency even under stress. This adaptability ensures that services remain stable, responsive, and available when traffic demands fluctuate rapidly.

Enhances Application Performance During Usage Surges

Application performance can degrade rapidly under sudden user load, but a network load balancer plays a pivotal role in preventing such degradation. By offloading tasks to the least busy servers and ensuring traffic is intelligently split, it eliminates performance bottlenecks. This results in faster response times, smoother application behavior, and overall better user satisfaction. Load balancers can also integrate with performance monitoring tools to detect slowdowns and reroute requests proactively. This continuous feedback loop helps maintain consistent application quality. When usage surges occur, the network load balancer becomes the silent hero that keeps your applications running fast and efficiently.

A Network Load Balancer Improves Uptime and Reliability

Uptime and reliability are non-negotiable in mission-critical applications. A network load balancer significantly contributes to both by continuously monitoring server health and distributing requests only to available nodes. This eliminates single points of failure and allows redundant infrastructure to operate seamlessly. Load balancers often include SSL termination and TCP optimization features, further improving speed and reducing the load on backend servers. With constant real-time monitoring and traffic control, the network load balancer provides a robust safety net against outages. By improving uptime and ensuring predictable reliability, it becomes a cornerstone of any enterprise-grade IT strategy and system architecture.

Conclusion

In today’s fast-paced digital world, a network load balancer is essential for maintaining high performance, availability, and reliability of online services. Whether managing thousands of users or supporting real-time applications, load balancers ensure that servers work smarter, not harder. They reduce downtime, optimize resource usage, and offer seamless scalability to meet unpredictable user demand. Investing in a network load balancer is not just a technical upgrade—it’s a strategic move toward delivering a superior user experience and safeguarding digital infrastructure. For any business aiming to stay competitive, implementing a network load balancer is a vital step toward operational excellence.


How Address Verification APIs Help Prevent Fraud and Reduce Failed Deliveries

Inaccurate addresses are more than just logistical problems—they’re gateways to financial loss, fraud, and customer dissatisfaction. Address verification APIs are the silent workhorses behind successful shipping, billing, and identity verification. This article explores how they safeguard your business.

What Is an Address Verification API?

An Address Verification API automatically checks and validates addresses during data entry or prior to shipment. It corrects typos, standardizes formats, and flags incomplete or fraudulent addresses.

How Fraud Happens Without Address Validation

Fake Addresses Used for Promotions

Stolen Credit Cards with Mismatched Addresses

Synthetic Identities for Loan or Benefit Fraud

Shipping to Abandoned Warehouses

Fraud Prevention Benefits

Address Matching with Payment Methods

Geolocation Cross-Checks

Instant Blacklist Notifications

Reduced Chargebacks and Identity Fraud

Failed Deliveries: Hidden Costs

Every failed delivery incurs:

Reshipment fees

Wasted materials

Customer support time

Lower satisfaction scores

How Address Verification APIs Reduce Delivery Failures

Autocorrect Minor Typos

Suggest Valid Alternatives

Ensure Address Completeness

International Format Normalization

Address Verification vs. Address Autocomplete

Feature | Verification API | Autocomplete API
Purpose | Validate + Correct | Suggest + Complete
Best For | Shipping, Compliance | Checkout UX
Prevents Fraud? | Yes | No

Popular Address Verification API Providers

Lob

Smarty

PostGrid

Loqate

Melissa

Compliance and Security Benefits

PCI DSS Alignment

HIPAA for Healthcare

GDPR for European Data

Data Encryption and Logging

Industry Use Cases

E-commerce: Reduces return-to-sender rates

Banking: Validates KYC (Know Your Customer) addresses

Insurance: Ensures property address accuracy

Logistics: Reduces routing and delivery errors

Integration Workflow

Capture Address

Call API

Receive Standardized + Validated Address

Update CRM or Proceed to Checkout

Sample API Call

{ "address_line1": "742 Evergreen Ter", "city": "Springfield", "country": "USA", "zip": "62704" }

Output Response

{ "status": "verified", "suggested_correction": "742 Evergreen Terrace, Springfield, IL 62704" }
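As a rough sketch of the "Receive Standardized + Validated Address" step, the Dart snippet below parses a response shaped like the sample output and decides what to do next. The field names "status" and "suggested_correction" are taken from that sample only; the AddressCheck class and parseVerification function are hypothetical, and each real provider has its own schema.

```dart
import 'dart:convert';

/// Outcome of interpreting a verification response.
class AddressCheck {
  AddressCheck(this.verified, this.suggestion);

  final bool verified;
  final String? suggestion;
}

/// Parses a response body shaped like the sample output.
/// The keys used here come from that sample, not from any provider's API.
AddressCheck parseVerification(String body) {
  final data = jsonDecode(body) as Map<String, dynamic>;
  return AddressCheck(
    data['status'] == 'verified',
    data['suggested_correction'] as String?,
  );
}

void main() {
  const body = '{"status": "verified", '
      '"suggested_correction": "742 Evergreen Terrace, Springfield, IL 62704"}';
  final check = parseVerification(body);
  if (check.verified && check.suggestion != null) {
    print('Use: ${check.suggestion}');
  } else {
    print('Ask the customer to re-enter the address');
  }
}
```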

Best Practices for Implementation

Validate before checkout

Offer user overrides only if confidence score is high

Show real-time correction suggestions

Measuring API Effectiveness

Failed Delivery Rate

Fraud Detection Rate

Customer Satisfaction Score

Cost Per Delivery

Conclusion: Protecting Your Bottom Line

Address Verification APIs are not just optional—they’re essential. Preventing fraud, reducing delivery errors, and enhancing customer trust starts with clean, validated data. Integrate it right, and you’ll avoid the costs of wrong addresses and bad actors.


GQAT Tech’s QA Arsenal: Powerful Tools That Make Quality Assurance Smarter

In this technology-driven age, delivering high-quality software is not optional but a necessity. Clients now expect digital products (apps, websites, and so on) to be delivered quickly and free of errors. The best way to achieve this is a quality assurance (QA) process backed by effective tools that adapt to each client's needs.

The GQAT Tech QA team calls its combination of efficient tools, productive platforms, automation, and proven processes the QA Arsenal. These testing tools and approaches help a QA team find bugs more quickly, reduce manual effort, and extend test coverage to fit the software project.

Now, let's look at what a QA Arsenal is, why it is important, and how QA will help your business produce better software.

What is the QA Arsenal?

The "QA Arsenal" refers to the collection of tools, frameworks, and strategies that the QA team at GQAT Tech employs to provide quality testing to clients. Think of it as a toolbox that holds everything a tester needs to complete a project.

It includes:

Automation testing tools

Manual testing techniques

Defect tracking systems

Performance testing platforms

Mobile and web testing tools

CI/CD integrations

Reporting and analytics dashboards

What Makes GQAT’s QA Arsenal Unique?

We do not use tools haphazardly. We pick the most appropriate tools based on the client's project type, technology stack, delivery timeline, and quality requirements. The QA Arsenal is designed to be flexible and covers:

Web apps

Mobile apps

Embedded systems

IoT devices

Enterprise software

Whether the job calls for functional, compatibility, API, or performance testing, GQAT Tech uses a custom mix of tools to ensure that the product is reliable, secure, and ready for launch.

Tools Included in the QA Arsenal

Here are some common tools and platforms GQAT Tech uses:

🔹 Automation Tools

Selenium – For web application automation

Appium – For mobile app automation

TestNG / JUnit – For running and managing test cases

Robot Framework – For keyword-driven testing

Postman – For API testing

JMeter – For performance and load testing

🔹 Defect & Test Management

JIRA – To log, track, and manage bugs

TestRail / Zephyr – For test case management

Git & Jenkins – For version control and CI/CD

BrowserStack / Sauce Labs – For cross-browser and device testing

How It Helps Clients

Using the QA Arsenal allows GQAT Tech to:

Detect Bugs Early – Catch issues before they reach end-users

Save Time – Automation reduces time spent on repetitive tasks

Test on Real Devices – Ensures compatibility across systems

Generate Reports – Easy-to-read results and test status

Integrate with DevOps – Faster release cycles and fewer rollbacks

Improve Product Quality – Fewer bugs mean a better user experience

Real Results for Real Projects

GQAT Tech’s QA Arsenal has been successfully used across different domains like:

FinTech

Healthcare

E-commerce

Travel & Transport

EdTech

AI and IoT Platforms

With their domain expertise and knowledge of tools, they help businesses move faster, mitigate risks, and build customer confidence.

Conclusion

Building a great QA team is essential, but having them equipped with the right tools makes all the difference. GQAT Tech’s QA Arsenal provides their testers with everything they need to test faster, smarter, and more comprehensively.

If you are building a digital product and want to ensure it is ready for release in the real world, you need a testing partner who does not leave things to chance. You need a testing partner with a battle-tested QA arsenal.

💬 Ready to experience smarter quality assurance?

👉 Explore GQAT Tech’s QA Arsenal and get in touch with their expert team today!

#QA Arsenal#Software Testing Tools#Quality Assurance Strategies#Automation Testing#Manual Testing#Selenium#Appium#Test Management Tools#CI/CD Integration#Performance Testing#Defect Tracking#Cross-Browser Testing#GQAT Tech QA Services#Agile Testing Tools#End-to-End Testing


Optimizing Flutter App Performance: Techniques and Tools

An app’s performance plays a crucial role in shaping the user experience. Today’s users expect mobile apps to load instantly, scroll seamlessly, and respond immediately to every touch. And when you're working with Flutter App Development, performance isn't just a luxury — it's a necessity.

At Siddhi Infosoft, we specialize in delivering top-notch Flutter Application Development Services. Over the years, we've worked on a wide range of Flutter apps — from sleek MVPs to full-featured enterprise apps. One thing we’ve learned: performance optimization is an ongoing process, not a one-time task.

In this blog, we’re diving deep into how to optimize your Flutter app’s performance using proven techniques and powerful tools. Whether you’re a developer or a business looking to fine-tune your Flutter app, these insights will guide you in the right direction.

Why Flutter App Performance Matters

Flutter offers a single codebase for both Android and iOS, fast development cycles, and beautiful UIs. But like any framework, performance bottlenecks can creep in due to poor coding practices, heavy widget trees, or inefficient API calls.

Here’s what poor performance can lead to:

High uninstall rates

Negative user reviews

Low engagement and retention

Decreased revenue

That’s why performance optimization should be a key pillar of any Flutter App Development strategy.

Key Techniques to Optimize Flutter App Performance

1. Efficient Widget Tree Management

Flutter revolves around widgets — from layout design to app logic, everything is built using widgets. But deep or poorly structured widget trees can slow things down.

What to do:

Use const constructors wherever possible. A const widget is a compile-time constant, so Flutter reuses the same instance and can skip rebuilding it when its parent rebuilds.

Avoid nesting too many widgets. Refactor large build methods into smaller widgets.

Prefer ListView.builder over building a list manually, especially for long lists.
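The three tips above can be combined in one small sketch. It assumes a plain list of strings as data; ItemSeparator and ProductList are hypothetical widget names used only for illustration.

```dart
import 'package:flutter/material.dart';

/// A small leaf widget with a const constructor, so callers can write
/// `const ItemSeparator()` and Flutter can reuse the same instance.
class ItemSeparator extends StatelessWidget {
  const ItemSeparator({super.key});

  @override
  Widget build(BuildContext context) => const Divider(height: 1);
}

/// Builds rows lazily: only items near the viewport are constructed.
class ProductList extends StatelessWidget {
  const ProductList({super.key, required this.names});

  final List<String> names; // hypothetical data for illustration

  @override
  Widget build(BuildContext context) {
    return ListView.builder(
      itemCount: names.length,
      itemBuilder: (context, index) => Column(
        mainAxisSize: MainAxisSize.min,
        children: [
          ListTile(title: Text(names[index])),
          const ItemSeparator(),
        ],
      ),
    );
  }
}
```

Splitting the separator into its own widget keeps the build method short and gives the framework a const subtree it does not need to rebuild.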

2. Minimize Repaints and Rebuilds

Excessive UI rebuilds consume CPU resources and slow the app.

Pro tips:

Use setState() judiciously. It rebuilds the entire subtree below the State it is called on, so calling it high in the widget tree can rebuild most of the screen.

Use ValueNotifier or ChangeNotifier with Provider to localize rebuilds.

Use the shouldRepaint method wisely in CustomPainter.
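A minimal sketch of two of these tips follows: a ValueNotifier read through ValueListenableBuilder (a Flutter SDK widget, used here instead of Provider to keep the example dependency-free) and a CustomPainter whose shouldRepaint compares its inputs. The names counter, CounterLabel, and ProgressPainter are hypothetical.

```dart
import 'package:flutter/material.dart';

/// Holds the counter outside the widget tree so only listeners rebuild.
final ValueNotifier<int> counter = ValueNotifier<int>(0);

class CounterLabel extends StatelessWidget {
  const CounterLabel({super.key});

  @override
  Widget build(BuildContext context) {
    // Only this builder reruns when counter.value changes;
    // the surrounding widgets are left alone.
    return ValueListenableBuilder<int>(
      valueListenable: counter,
      builder: (context, value, child) => Text('Count: $value'),
    );
  }
}

/// Paints a horizontal progress bar.
class ProgressPainter extends CustomPainter {
  const ProgressPainter(this.progress);

  final double progress; // expected range: 0.0 to 1.0

  @override
  void paint(Canvas canvas, Size size) {
    final paint = Paint()..color = Colors.blue;
    canvas.drawRect(
      Rect.fromLTWH(0, 0, size.width * progress, size.height),
      paint,
    );
  }

  // Repaint only when the value that affects the drawing has changed.
  @override
  bool shouldRepaint(ProgressPainter oldDelegate) =>
      oldDelegate.progress != progress;
}
```

Incrementing the value with counter.value++ from anywhere in the app updates the label without a setState() call on a parent widget.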

3. Lazy Loading of Assets and Data

Loading everything at once can cause UI jank or app freezing.

Optimization tips:

Lazy load images using CachedNetworkImage or FadeInImage.

Use pagination when loading data lists.

Compress images before bundling them with the app.
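The pagination tip can be sketched in plain Dart, independent of any UI. PagedLoader and PageFetcher are hypothetical names, and the in-memory list in main stands in for a real API call.

```dart
/// Signature of a hypothetical page fetcher: returns up to [limit] items
/// starting at [offset]. In an app this would wrap an HTTP call.
typedef PageFetcher<T> = Future<List<T>> Function(int offset, int limit);

/// Loads a long list one page at a time instead of all at once.
class PagedLoader<T> {
  PagedLoader(this._fetch, {this.pageSize = 20});

  final PageFetcher<T> _fetch;
  final int pageSize;
  final List<T> items = [];
  bool hasMore = true;
  bool _loading = false;

  /// Fetches the next page; safe to call repeatedly from a scroll listener.
  Future<void> loadNext() async {
    if (_loading || !hasMore) return;
    _loading = true;
    try {
      final page = await _fetch(items.length, pageSize);
      items.addAll(page);
      // A short page means the source has run out of data.
      hasMore = page.length == pageSize;
    } finally {
      _loading = false;
    }
  }
}

Future<void> main() async {
  // Fake data source with 45 items, standing in for a real API.
  final all = List<int>.generate(45, (i) => i);
  final loader = PagedLoader<int>(
    (offset, limit) async => all.skip(offset).take(limit).toList(),
  );

  while (loader.hasMore) {
    await loader.loadNext();
    print('loaded ${loader.items.length}'); // 20, then 40, then 45
  }
}
```

In a Flutter screen, loadNext() would typically be triggered when the user scrolls near the end of a ListView.builder.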

4. Efficient Use of Animations

Animations add to UX, but if not optimized, they can slow the frame rate.

Best practices:

Use AnimatedBuilder and AnimatedWidget to optimize custom animations.

Avoid unnecessary loops or timers within animations.

Keep animations simple and leverage hardware-accelerated transitions for smoother performance and better efficiency.
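As an illustration of the AnimatedBuilder tip, the sketch below rotates a logo while keeping the logo itself out of the per-frame rebuild by passing it as the child argument. SpinningLogo is a hypothetical widget name; the two-second duration is arbitrary.

```dart
import 'dart:math' as math;

import 'package:flutter/material.dart';

class SpinningLogo extends StatefulWidget {
  const SpinningLogo({super.key});

  @override
  State<SpinningLogo> createState() => _SpinningLogoState();
}

class _SpinningLogoState extends State<SpinningLogo>
    with SingleTickerProviderStateMixin {
  late final AnimationController _controller;

  @override
  void initState() {
    super.initState();
    _controller = AnimationController(
      vsync: this,
      duration: const Duration(seconds: 2),
    )..repeat();
  }

  @override
  void dispose() {
    _controller.dispose(); // stop the ticker when the widget goes away
    super.dispose();
  }

  @override
  Widget build(BuildContext context) {
    return AnimatedBuilder(
      animation: _controller,
      // Built once and passed through; not rebuilt on every frame.
      child: const FlutterLogo(size: 64),
      builder: (context, child) => Transform.rotate(
        angle: _controller.value * 2 * math.pi,
        child: child,
      ),
    );
  }
}
```

Only the Transform is recreated on each tick, which keeps the work per frame small.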

5. Reduce App Size

Lighter apps load faster and use less memory.

How to reduce size:

Remove unused assets and libraries.

Use flutter build apk --split-per-abi to generate smaller APKs for different architectures.

Use ProGuard or R8 to minify and shrink the Android build.

Tools to Boost Flutter App Performance

Now that we’ve covered the techniques, let’s explore some tools that every Flutter Application Development Services provider should have in their toolkit.

1. Flutter DevTools

Flutter comes with a built-in suite of performance and debugging tools to help you monitor, optimize, and troubleshoot your app efficiently.

What it offers:

UI layout inspection

Frame rendering stats

Memory usage insights

Timeline performance tracking

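How to use: DevTools ships with current Flutter SDKs, so you can open it from your IDE or run dart devtools in a terminal. Older SDK versions required running flutter pub global activate devtools first.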

2. Performance Overlay

Quickly visualize your app's rendering performance.

How to activate:

MaterialApp(
  showPerformanceOverlay: true,
  ...
)

What it shows:

Red bars indicate UI jank or frame drops.

Helps detect where the app is not maintaining 60fps.

3. Dart Observatory (now part of DevTools)

This is useful for:

CPU profiling

Memory leak detection

Analyzing garbage collection

It’s especially valuable during long testing sessions.

4. Firebase Performance Monitoring

Ideal for monitoring production apps.

Why use it:

Monitor real-world performance metrics

Track slow network requests

Understand app startup time across devices

At Siddhi Infosoft, we often integrate Firebase into Flutter apps to ensure real-time performance tracking and improvement.

5. App Size Tool

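Use the --analyze-size flag with a concrete build target, for example flutter build apk --analyze-size (APK builds may also need a single --target-platform), to identify which packages or assets are increasing your app’s size.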

This helps you:

Trim unnecessary dependencies